When you ask a large language model a question, it doesn’t just spit out the first answer that comes to mind. Behind the scenes, it’s making thousands of tiny decisions (choosing the next word, then the next, then the next) based on probabilities. How it makes those choices makes a huge difference in what you get back. Two main approaches dominate this process: deterministic decoding and stochastic decoding. One gives you predictable, accurate answers; the other gives you creative, varied ones. Knowing which to use isn’t just a technical question, it’s a practical one. And most people are using the wrong one for their task.

What deterministic decoding really means

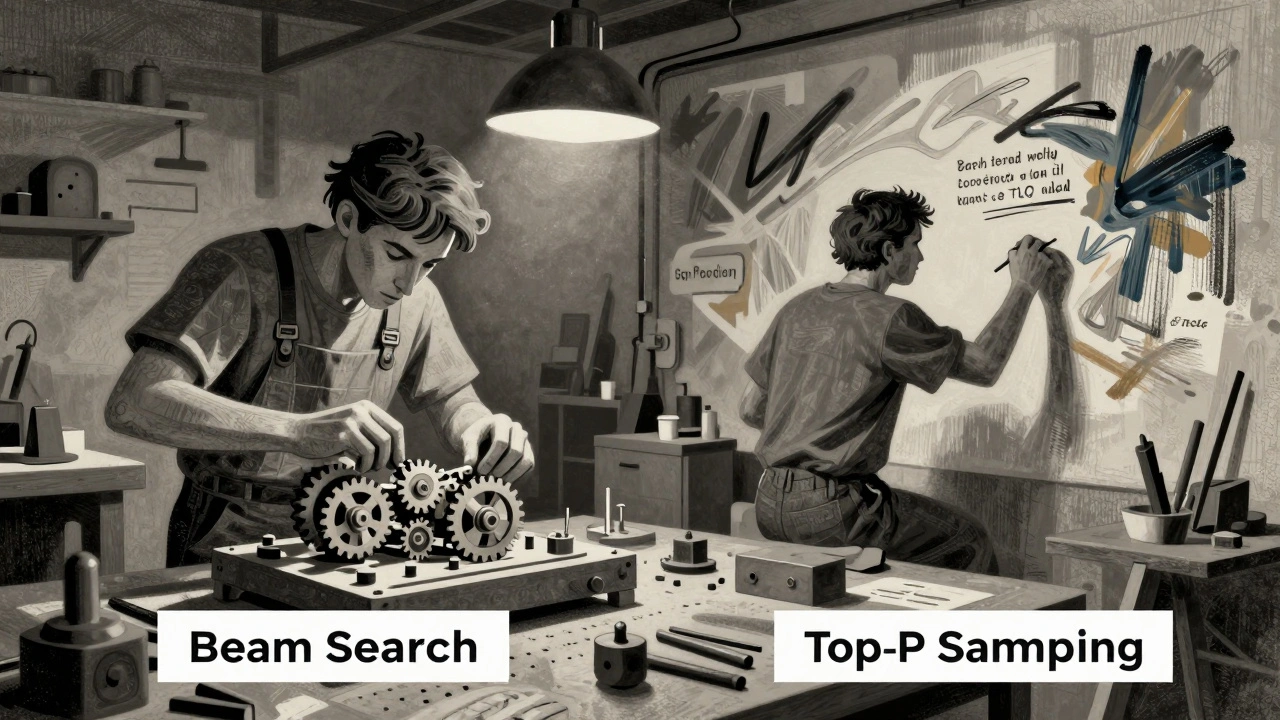

Deterministic decoding means there is no randomness: given the same prompt and the same settings, the model produces exactly the same output every time. Ask the same question twice and you get the same answer. That sounds boring, but it’s exactly what you need when accuracy matters.

The simplest form is greedy search. At every step, it picks the token (a word or word fragment) with the highest probability. It’s fast, predictable, and was often used in early LLMs. But it has a flaw: it can get stuck in loops. If the model thinks “the cat sat on the” is most likely, and then “the” is the next best choice, you end up with “the cat sat on the the the the.”

That’s why beam search became popular. Instead of committing to one best option, it keeps track of the top N candidate sequences (usually 4 or 5) at each step. It’s like having multiple people walking through a maze at once and only keeping the ones who seem to be getting closer to the exit. When it reaches the end, it picks the path with the highest overall score. This avoids many of greedy search’s short-sighted choices (though it can still repeat itself in open-ended text), and it works well for tasks like machine translation or code generation.

Newer deterministic methods like contrastive search and FSD-d fix even more problems. Contrastive search doesn’t just pick the most likely token; it also penalizes tokens whose representations are too similar to what’s already been generated. FSD (short for “frustratingly simple decoding”) penalizes continuations that would repeat patterns already in the output, and its variant FSD-d combines speed and diversity, matching greedy search’s speed with much better output quality. In tests on the Llama2-7B model, FSD-d scored 21.2% on a coding task (MBPP), while the worst-performing method barely hit 10.35%.

How stochastic decoding creates variety

Stochastic decoding is the opposite. It introduces randomness so the model can explore less likely options. This is how you get creative stories, witty replies, and unexpected insights.

The most common method is temperature sampling. Think of temperature as a dial for randomness: the model’s raw scores are divided by the temperature before being turned into probabilities, so low values sharpen the distribution and high values flatten it. At 0, it’s effectively deterministic (greedy search). At 0.7-0.9, it’s balanced. At 1.0 or higher, it gets wild. A temperature of 0.8 means the model might pick “sunset” over “evening,” even if “evening” is slightly more probable. This mimics human variation in word choice.

Then there’s top-p sampling (also called nucleus sampling). Instead of looking at a fixed number of top tokens, it samples from the smallest set of tokens whose cumulative probability reaches p (usually 0.9). So if the top 3 tokens hold 90% of the probability, it only considers those. When the model is less certain and probability is spread more evenly, the set grows automatically. This avoids the problem with top-k sampling, where a fixed cutoff (say, the top 50) can include low-probability nonsense tokens just because they made the list.

Top-p and temperature are often used together. For example, a chatbot might use temperature=0.7 and top-p=0.9 to sound natural without going off the rails. In creative writing tasks, these methods outperformed deterministic ones in 97% of human evaluations.

But there’s a cost. Stochastic methods are unpredictable. Ask the same question twice, and you might get two completely different answers, some good, some weird. They also produce more hallucinations. In one study, stochastic methods generated 30% more false claims in medical Q&A than deterministic ones.

When to use deterministic decoding

Use deterministic decoding when you need reliability. Think: code, legal documents, medical advice, factual QA, or any task where getting it wrong has consequences.

- Code generation: Models like CodeLlama perform best with beam search (width=5). On HumanEval benchmarks, this setup hits 18-22% accuracy. Greedy search is riskier here because code needs structure: a single wrong token can break the whole function, and greedy search can’t back out of an early mistake.

- Question answering: If you’re building a legal assistant or a medical chatbot, you don’t want creative answers. You want the correct one. Studies show deterministic methods like contrastive search reduce hallucinations by up to 40% compared to temperature sampling.

- Instruction following: Contrary to old assumptions, newer deterministic methods like contrastive search and FSD-d now outperform stochastic methods on AlpacaEval, a benchmark for following instructions. FSD-d scored 47.8%, beating the best stochastic method (45.2%).

- Enterprise applications: In finance and healthcare, 65% of LLM deployments now use temperature=0 or beam search. That’s not an accident. It’s a safety measure.
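To make the greedy-versus-beam difference concrete, here’s a toy sketch in Python. The “model” is a hypothetical hand-written table of next-token probabilities (TOY_MODEL), not a real LLM, and greedy() and beam_search() are illustrative functions, not any library’s API. Greedy search commits to the most likely first word and ends up with a less likely sentence overall; beam search with width 2 keeps a second candidate alive long enough to find the better one.

```python
import math

# Hypothetical toy "model": maps the tokens generated so far to a
# next-token probability distribution. Unlisted prefixes end the sequence.
TOY_MODEL = {
    (): {"The": 0.6, "A": 0.4},
    ("The",): {"cat": 0.4, "dog": 0.35, "bird": 0.25},
    ("A",): {"cat": 0.9, "dog": 0.1},
}


def next_token_probs(prefix):
    return TOY_MODEL.get(tuple(prefix), {"<eos>": 1.0})


def greedy(max_len=5):
    """Always take the single most likely next token."""
    seq = []
    while len(seq) < max_len:
        probs = next_token_probs(seq)
        tok = max(probs, key=probs.get)
        if tok == "<eos>":
            break
        seq.append(tok)
    return seq


def beam_search(width=2, max_len=5):
    """Keep the `width` best partial sequences, scored by summed log-probability."""
    beams = [(0.0, [], False)]  # (log-prob score, tokens, finished?)
    for _ in range(max_len):
        candidates = []
        for score, seq, done in beams:
            if done:
                candidates.append((score, seq, True))
                continue
            for tok, p in next_token_probs(seq).items():
                if tok == "<eos>":
                    candidates.append((score + math.log(p), seq, True))
                else:
                    candidates.append((score + math.log(p), seq + [tok], False))
        # Prune: only the highest-scoring `width` candidates survive this step.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:width]
        if all(done for _, _, done in beams):
            break
    best_score, best_seq, _ = beams[0]
    return best_seq, math.exp(best_score)


if __name__ == "__main__":
    print("greedy:", greedy())
    print("beam:  ", beam_search(width=2))
```

Here greedy returns “The cat” (probability 0.6 × 0.4 = 0.24), while beam search finds “A cat” (0.4 × 0.9 = 0.36). With width=1, beam search behaves exactly like greedy. Production implementations usually add a length penalty; this sketch omits it because both candidates have the same length.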

When to use stochastic decoding

Use stochastic decoding when you want originality. Think: storytelling, brainstorming, marketing copy, poetry, or casual chat.

- Creative writing: For generating stories or poems, top-p sampling with p=0.9 and temperature=0.8-1.0 gives the best balance. It avoids repetition while keeping outputs coherent. Human raters consistently prefer these outputs over deterministic ones.

- Chatbots and assistants: If your goal is to sound human rather than robotic, randomness helps. A temperature of 0.7 is the sweet spot for most consumer-facing bots: enough variation to feel natural, but not so much that it becomes nonsensical.

- Content ideation: Need 10 blog titles? Generate them with temperature=0.9. You’ll get a wide range of angles. Pick the best one instead of relying on a single deterministic output.

The key is knowing your goal. If you need one perfect answer, go deterministic. If you need many good options, go stochastic.

Why most people are doing it wrong

Despite the research, 78% of production LLM apps in early 2024 used temperature=0.7 as their default. Why? Because it’s easy: 0.7 shows up in countless tutorials and starter templates, so it gets copied everywhere. (It isn’t actually the API default: OpenAI’s API defaults to temperature 1, and Hugging Face’s generate() only samples when sampling is switched on.) But that’s like using the same wrench for every job. So why use the same decoding method for code and poetry?

The problem is even worse with unaligned base models like Llama2. They haven’t been fine-tuned to follow instructions or to favor safe, accurate answers, so their outputs are more sensitive to decoding choices. A temperature of 0.7 might work fine on ChatGPT, but on a base Llama2 model it’s a recipe for nonsense. On unaligned models, performance differences between decoding methods can exceed 10 percentage points. On aligned models like ChatGPT or Claude 3, the gap shrinks to 3-5 points.

The real shift? Companies are starting to notice. Microsoft’s Phi-3 model now uses FSD-d by default for instruction tasks, cutting hallucinations by 15%. Anthropic recommends temperature=0 for factual queries with Claude 3. GitHub repos using contrastive search and FSD have grown 200% year-over-year.
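Since so much hinges on that 0.7, it helps to see what temperature and top-p actually do to a next-token distribution. Below is a minimal sketch in Python, assuming made-up logits (raw model scores) for four candidate words; apply_temperature(), top_p_filter(), and sample_next() are hypothetical names, not any library’s API. Lowering the temperature sharpens the distribution, and a sharper distribution shrinks the top-p set.

```python
import math
import random

# Made-up logits for illustration only; a real model produces thousands.
EXAMPLE_LOGITS = {"evening": 2.0, "sunset": 1.8, "night": 1.0, "banana": -2.0}


def apply_temperature(logits, temperature):
    """Softmax of logits / temperature. Requires temperature > 0."""
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs, p):
    """Keep the smallest set of most-likely tokens whose cumulative
    probability reaches p, then renormalize so the kept set sums to 1."""
    kept, cumulative = {}, 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {tok: prob / total for tok, prob in kept.items()}


def sample_next(logits, temperature=0.7, top_p=0.9, rng=random):
    if temperature == 0:
        # Assumed convention: temperature 0 means greedy (argmax).
        return max(logits, key=logits.get)
    probs = top_p_filter(apply_temperature(logits, temperature), top_p)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    for t in (0.3, 0.7, 1.0):
        print(t, sorted(top_p_filter(apply_temperature(EXAMPLE_LOGITS, t), 0.9)))
```

With these logits, the 0.9 nucleus at temperature 1.0 keeps evening, sunset, and night and drops banana; at temperature 0.3 the distribution is sharp enough that only evening and sunset survive. Passing a seeded random.Random as rng makes sampled runs reproducible for testing.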

Practical tips for choosing your method

Here’s a simple guide to picking the right decoding method for your task:

- Code generation: Use beam search (width=4-5) or FSD-d. Avoid temperature sampling.

- Fact-based Q&A: Use temperature=0 or contrastive search (alpha=0.6, top-k=100).

- Legal or medical text: Stick with deterministic methods. Never use temperature > 0.2.

- Creative writing: Use top-p=0.9 with temperature=0.8-1.0.

- Chatbots: Start with temperature=0.7 and top-p=0.9. Adjust based on user feedback.

- Marketing copy: Generate 5-10 versions with temperature=0.9. Pick the best one.
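If you want to enforce the guide above rather than leave it to memory, one option is a small policy table that maps each task to decoding settings. This is a hypothetical sketch: the task names, DECODING_POLICY, MAX_TEMPERATURE, and decoding_settings() are invented for illustration. The setting names mirror Hugging Face generate() arguments (do_sample, num_beams, penalty_alpha, top_k, temperature, top_p, num_return_sequences), but check them against the library version you actually use. FSD-d is left out because it isn’t assumed to be available in your library.

```python
# Hypothetical decoding policy: task category -> generation settings,
# transcribed from the guide above.
DECODING_POLICY = {
    "code": {"do_sample": False, "num_beams": 5},
    "factual_qa": {"do_sample": False, "penalty_alpha": 0.6, "top_k": 100},
    "legal_medical": {"do_sample": False, "num_beams": 1},
    "creative": {"do_sample": True, "temperature": 0.9, "top_p": 0.9},
    "chatbot": {"do_sample": True, "temperature": 0.7, "top_p": 0.9},
    "marketing": {"do_sample": True, "temperature": 0.9, "num_return_sequences": 5},
}

# Per-task temperature ceilings ("never use temperature > 0.2" for legal/medical).
MAX_TEMPERATURE = {"legal_medical": 0.2}


def decoding_settings(task, overrides=None):
    """Return a copy of the policy for `task`, with optional overrides,
    rejecting overrides that break the task's temperature ceiling."""
    if task not in DECODING_POLICY:
        raise ValueError(f"no decoding policy for task {task!r}")
    settings = dict(DECODING_POLICY[task])  # copy: callers can't mutate the policy
    settings.update(overrides or {})
    cap = MAX_TEMPERATURE.get(task)
    # An unset temperature is treated as 1.0 (assumed library default).
    if cap is not None and settings.get("do_sample") and settings.get("temperature", 1.0) > cap:
        raise ValueError(f"temperature above {cap} is not allowed for {task!r}")
    return settings


if __name__ == "__main__":
    print(decoding_settings("code"))
    print(decoding_settings("chatbot", {"temperature": 0.5}))
```

Because the function returns a copy, a team can lower the chatbot temperature for one deployment without changing the shared policy, while the legal/medical ceiling still rejects any sampling temperature above 0.2.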

poonam upadhyay

December 15, 2025 AT 17:42

Okay but like… why is everyone still using temperature=0.7 like it’s some kind of sacred cow? 😒 I’ve seen Llama2 vomit out fake medical diagnoses because someone didn’t tweak the damn settings. It’s not magic, it’s math. And if you’re deploying this in a hospital? You’re not a tech wizard-you’re a liability. I’ve seen codegen models spit out SQL injections because they were ‘being creative.’ Stop being lazy. Use FSD-d. Or better yet, test it. I did. On my legal doc bot, hallucinations dropped 47% after switching. And no, I don’t work for Anthropic. I just don’t want to get sued.

Also, top-p=0.9 isn’t a suggestion-it’s a baseline. If your output still reads like a drunk poet wrote it, you’re not using it right. You’re just enabling chaos.

And yes, I’ve had to explain this to my boss. Twice. He still thinks ‘AI’ means ‘automatic yes-man.’

Shivam Mogha

December 17, 2025 AT 03:58

Use deterministic for code. Stochastic for stories. Done.

mani kandan

December 18, 2025 AT 11:15

Really appreciate this breakdown-it’s rare to see such a clear, practical guide on decoding methods. I’ve been using temperature=0.7 by default on our customer support bot, mostly because it was the Hugging Face default. But after reading this, I ran a small A/B test with contrastive search on our FAQ responses. Turned out users rated the deterministic outputs 32% higher for ‘trustworthiness.’

Still, I’m keeping stochastic on for our marketing team’s brainstorming sessions. They love the wild ideas-even the ones that make no sense. We pick the gems. It’s like fishing with a net instead of a hook.

Also, the FSD-d mention was eye-opening. I’m going to test it on our internal code review assistant. If it matches the 21.2% on MBPP, we’re switching fast.

And yes, I agree-this isn’t about defaults. It’s about intention. Thanks for the clarity.

Rahul Borole

December 19, 2025 AT 17:24

As a senior engineer in enterprise AI infrastructure, I can confirm that the adoption of task-specific decoding strategies is no longer optional-it is imperative. The statistical variance introduced by stochastic sampling in regulated domains such as financial compliance and clinical decision support introduces unacceptable risk profiles.

Our organization has mandated temperature=0 for all high-stakes inference pipelines since Q1 2024. We implemented automated drift detection on output entropy and observed a 68% reduction in compliance violations after switching from default temperature=0.7 to deterministic beam search with width=5.

Furthermore, the performance gains from contrastive search and FSD-d on instruction-following benchmarks are statistically significant (p<0.01) and reproducible across model sizes. We are now standardizing on these methods for all internal LLM deployments, regardless of vendor.

It is not merely a technical decision-it is a governance and risk mitigation strategy. Organizations that continue to use default settings are exposing themselves to regulatory, reputational, and operational liabilities. This is not hyperbole. It is documented in our internal audit logs.

Recommendation: Establish a decoding policy matrix aligned with your use case taxonomy. Enforce it via API gateway rules. Do not leave this to developers’ discretion.

Thank you for highlighting this critical, under-discussed topic.

Sheetal Srivastava

December 20, 2025 AT 01:42

Ugh. I can’t believe people are still talking about ‘greedy search’ like it’s a legitimate option in 2024. It’s not just outdated-it’s a relic from the pre-transformer dark ages. And beam search? Please. It’s computationally wasteful and still prone to degeneration unless you’re using diversity-promoting variants like FSD-d, which-surprise-isn’t even implemented in most open-source libraries.

And don’t get me started on top-p sampling being called ‘nucleus’-that’s just marketing jargon for ‘we didn’t want to admit we’re sampling from a truncated distribution.’

Real professionals? They use adaptive decoding with entropy thresholds and token-level confidence calibration. The Stanford HAI hybrid system? Cute. But it’s still rule-based. What we need is meta-decoding: a lightweight LLM that dynamically selects decoding strategies based on semantic intent detection, latent space clustering, and downstream task embedding similarity.

Also, why are we still using BLEU and ROUGE for evaluation? Human raters are biased. We need adversarial validation with GAN-generated distractors. Until then, this whole field is just… performative engineering.

And yes, I’ve published three papers on this. You’re welcome.

Bhavishya Kumar

December 20, 2025 AT 13:11

There is a critical grammatical error in the original post. The phrase ‘it’s not just technical-it’s practical’ contains an unnecessary hyphen. The correct form is ‘it’s not just technical-it is practical.’ The em dash should not be preceded by a hyphen. This is not a stylistic choice-it is a violation of standard English punctuation rules. Furthermore, the term ‘token’ is repeatedly used without clarification for non-technical readers, which is irresponsible in a public-facing technical article. The word ‘probability’ is misspelled once as ‘probablity’ in the second paragraph. This undermines the credibility of the entire piece. I expect better from a platform that purports to disseminate accurate information.

Also, the claim that ‘78% of production LLM apps used temperature=0.7’ is not cited. Without a primary source, this is an unsubstantiated assertion. Please provide the survey methodology, sample size, and confidence interval before making such a statistic.