Black Seed USA AI Hub

Architectural Innovations That Improved Transformer-Based Large Language Models Since 2017

Since 2017, transformer-based language models have evolved through key architectural changes such as rotary position embeddings (RoPE), SwiGLU feed-forward layers, and pre-normalization. These innovations improved context handling, training stability, and efficiency, making modern AI models faster, more capable, and more scalable.
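As an illustration of one of these changes, here is a minimal sketch of RoPE on a single vector, assuming the interleaved pairing of dimensions used in the original RoFormer formulation (some libraries rotate the two halves of the vector instead). Each pair is rotated by an angle proportional to the token position, so the dot product of a rotated query and key depends only on their relative distance. The function names are illustrative, not from any particular library.

```python
import math
from typing import List


def rope(x: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Apply a rotary position embedding to one vector at position `pos`.

    Interleaved pairs (x[2i], x[2i+1]) are rotated by pos * base**(-2i/d).
    """
    d = len(x)
    if d % 2 != 0:
        raise ValueError("vector dimension must be even")
    out = [0.0] * d
    for i in range(d // 2):
        theta = pos * base ** (-2 * i / d)  # lower-index pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s  # standard 2-D rotation
        out[2 * i + 1] = a * s + b * c
    return out


def dot(u: List[float], v: List[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.3]
# Same relative offset (3 positions) gives the same attention score.
print(dot(rope(q, 5), rope(k, 2)), dot(rope(q, 13), rope(k, 10)))
```

Because rotations preserve vector length, RoPE changes only the angle between queries and keys, which is why it encodes relative position without adding a separate position vector.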

Incident Response Playbooks for LLM Security Breaches: How to Stop Prompt Injection, Data Leaks, and Harmful Outputs

LLM security breaches require specialized response plans. Learn how prompt injection, data leaks, and harmful outputs are handled with incident response playbooks built for AI systems rather than traditional IT.

Token Probability Distributions in Large Language Models: How Next-Word Prediction Works

Token probability distributions determine how language models choose the next word. Learn how softmax, temperature, top-k, and top-p sampling shape AI-generated text, and why understanding them gives you real control over model behavior.
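A minimal sketch of the pipeline described above, assuming a toy vocabulary given as a dict of raw logits: temperature-scaled softmax, then an optional top-k cut, then an optional top-p (nucleus) cut, renormalizing after each filter. Real inference stacks operate on tensors and may order or combine these filters differently; the helper names here are hypothetical.

```python
import math
import random
from typing import Dict, List, Optional, Tuple


def _normalize(pairs: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    z = sum(p for _, p in pairs)
    return [(t, p / z) for t, p in pairs]


def next_token_distribution(logits: Dict[str, float], temperature: float = 1.0,
                            top_k: Optional[int] = None,
                            top_p: Optional[float] = None) -> Dict[str, float]:
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if top_k is not None and top_k < 1:
        raise ValueError("top_k must be at least 1")
    # Softmax over logits / temperature; subtracting the max avoids overflow.
    m = max(logits.values())
    exps = {t: math.exp((l - m) / temperature) for t, l in logits.items()}
    probs = _normalize(sorted(exps.items(), key=lambda kv: kv[1], reverse=True))
    if top_k is not None:
        # Keep only the k most likely tokens.
        probs = _normalize(probs[:top_k])
    if top_p is not None:
        # Keep the smallest prefix whose cumulative probability reaches top_p.
        kept, cum = [], 0.0
        for t, p in probs:
            kept.append((t, p))
            cum += p
            if cum >= top_p:
                break
        probs = _normalize(kept)
    return dict(probs)


def sample(dist: Dict[str, float], rng: random.Random) -> str:
    """Draw one token from a normalized distribution."""
    r = rng.random()
    cum, last = 0.0, ""
    for tok, p in dist.items():
        cum += p
        last = tok
        if r < cum:
            return tok
    return last  # guards against floating-point rounding at the tail


logits = {"cat": 2.0, "dog": 1.0, "fish": 0.1}
print(next_token_distribution(logits, temperature=0.7, top_p=0.9))
print(sample(next_token_distribution(logits), random.Random(0)))
```

Lowering the temperature sharpens the distribution toward the top token, while top-k and top-p remove the low-probability tail before sampling.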

Retention and Deletion Policies for LLM Prompts and Logs: What You Need to Know

LLM prompt and log retention policies are critical for compliance and privacy. Learn how data is truly deleted, why retention periods are longer than you think, and what steps to take now to avoid regulatory fines and data leaks.

Why Generative AI Hallucinates: The Hidden Flaws in Probabilistic Language Models

Generative AI hallucinates because it predicts text based on statistical patterns, not truth. It doesn't understand facts; it reproduces patterns from its training data. This is why it can invent fake citations, medical claims, and court cases with complete confidence.

Code Quality, Maintainability, and Technical Debt in Vibe Coding

Vibe coding speeds up development with AI, but without careful review, it leads to poor code quality, high technical debt, and unmaintainable systems. Learn how to use AI-assisted coding without trapping yourself in a maintenance nightmare.

Few-Shot Prompting Patterns That Boost Accuracy in Large Language Models

Few-shot prompting improves LLM accuracy by 15-40% using just 2-8 examples. Learn the top patterns that work, where to apply them, and how to avoid common mistakes.
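One common few-shot pattern is to put an instruction first, then a handful of input/output pairs in an identical format, then the new query with its output slot left open. The sketch below assumes plain "Input:"/"Output:" labels separated by blank lines; the labels and the function name are illustrative choices, not a required format.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str, examples: List[Tuple[str, str]],
                          query: str, input_label: str = "Input",
                          output_label: str = "Output") -> str:
    """Assemble an instruction, worked examples, and an open-ended query."""
    blocks = [instruction.strip()]
    for inp, out in examples:
        # Every example uses exactly the same layout the model should copy.
        blocks.append(f"{input_label}: {inp}\n{output_label}: {out}")
    # The final block leaves the output empty for the model to complete.
    blocks.append(f"{input_label}: {query}\n{output_label}:")
    return "\n\n".join(blocks)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("I love it", "positive"), ("Broke after a day", "negative")],
    "Works exactly as described",
)
print(prompt)
```

Keeping the delimiters and label wording consistent across examples matters as much as the examples themselves, since the model imitates the format it sees.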

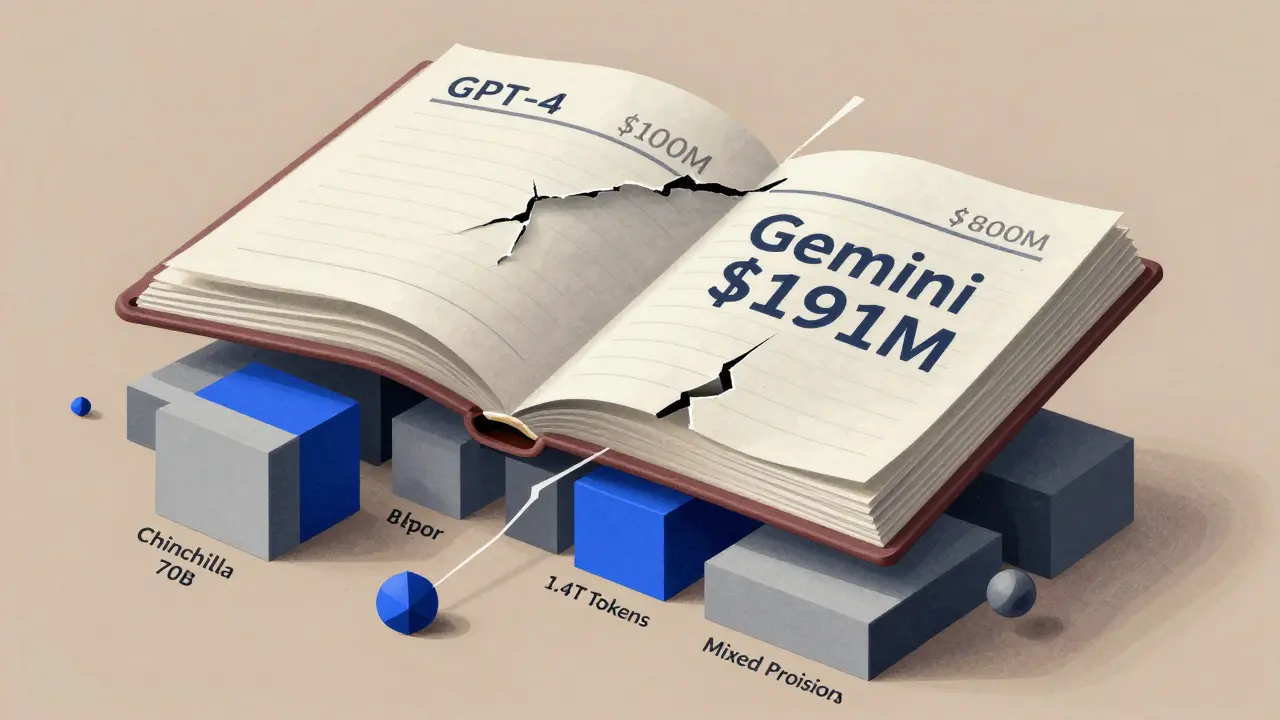

Cost-Optimal Training for LLMs: How to Balance Training and Inference Compute

Learn how to train LLMs at a fraction of the cost by balancing model size and training data. Discover why a bigger model isn't automatically better and how DeepMind's Chinchilla results reshaped compute-optimal training.
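The Chinchilla trade-off can be sketched with two widely used rules of thumb: training compute C ≈ 6·N·D FLOPs for N parameters and D training tokens, and a compute-optimal ratio of roughly 20 tokens per parameter. Substituting D = 20N gives C = 120N², so N = sqrt(C / 120). The inference term assumes about 2·N FLOPs per generated token. These are approximations, not exact laws, and the function names are hypothetical.

```python
import math
from typing import Tuple

TRAIN_FLOPS_PER_PARAM_TOKEN = 6  # approximation: C ≈ 6 * N * D
INFER_FLOPS_PER_PARAM_TOKEN = 2  # approximation: ~2 * N FLOPs per token served
TOKENS_PER_PARAM = 20            # Chinchilla-style rule of thumb


def chinchilla_optimal(compute_flops: float) -> Tuple[float, float]:
    """Return (parameters, training tokens) for a given training budget."""
    n = math.sqrt(compute_flops / (TRAIN_FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    return n, TOKENS_PER_PARAM * n


def lifetime_flops(n_params: float, train_tokens: float,
                   inference_tokens: float) -> float:
    """Total compute for training once and then serving `inference_tokens`."""
    return (TRAIN_FLOPS_PER_PARAM_TOKEN * n_params * train_tokens
            + INFER_FLOPS_PER_PARAM_TOKEN * n_params * inference_tokens)


# A budget of 5.88e23 FLOPs maps to about 70B parameters and 1.4T tokens.
n, d = chinchilla_optimal(5.88e23)
print(f"{n:.3g} params, {d:.3g} tokens")
```

When a model will serve many tokens, the inference term can dominate, which is why a smaller model trained on more data than the 20:1 ratio suggests can be cheaper over its lifetime.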

Allocating LLM Costs Across Teams: Chargeback Models That Actually Work

Learn how to allocate LLM costs fairly across teams using proven chargeback models that track tokens, retrievals, and agent loops rather than relying on guesswork. Stop overpaying and start optimizing.

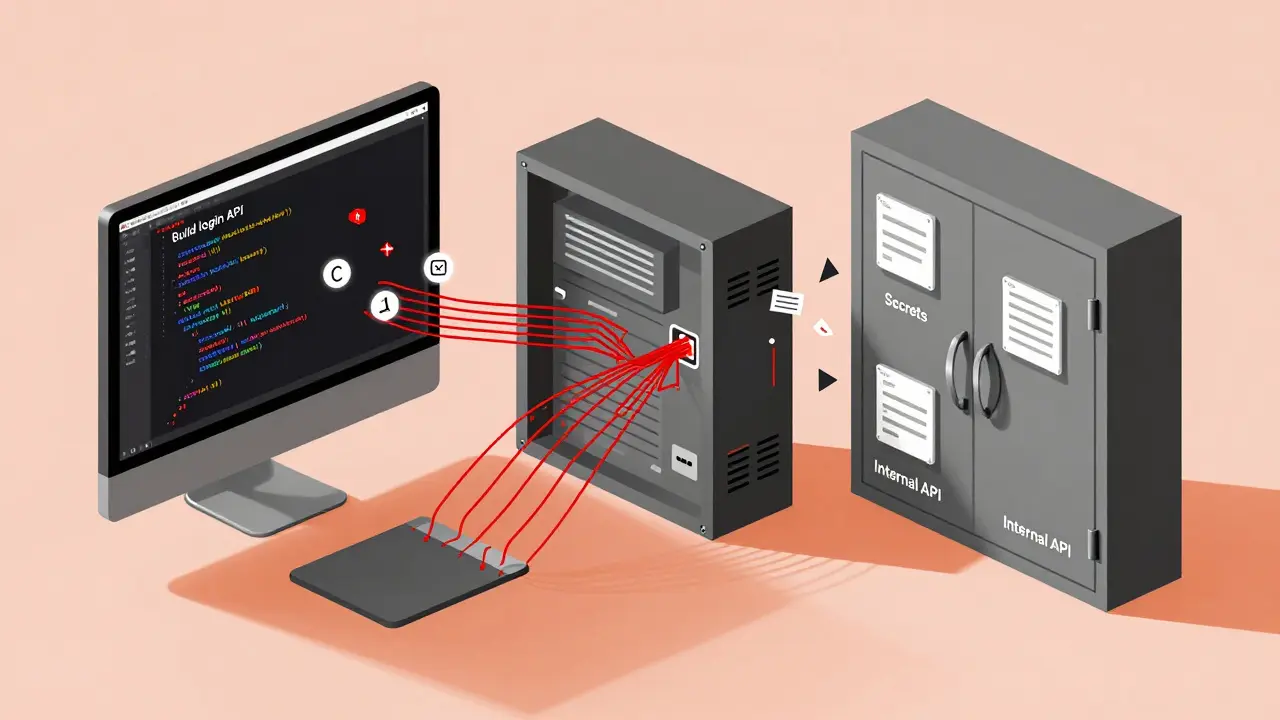

Telemetry and Privacy in Vibe Coding Tools: What Data Leaves Your Repo

Vibe coding tools like Claude Code and Cursor generate code from prompts, but they also collect telemetry. Learn what data leaves your repo, how privacy settings vary between tools, and how to protect your secrets.

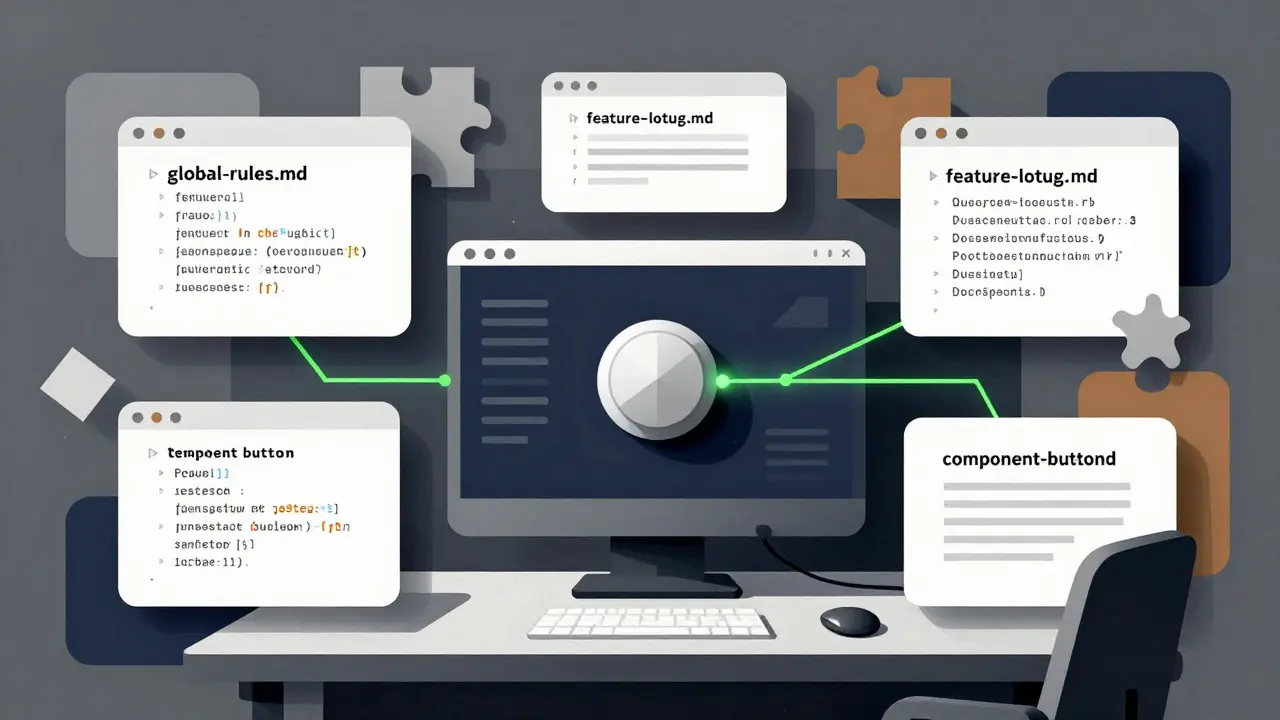

How Inline Code Context Makes Vibe Coding Accurate and Reliable

Inline code context transforms vibe coding from guesswork into precision. By providing structured rules before prompting, teams cut revisions by 73%, reduce bugs by 63%, and ship features 5.8x faster.

Evaluation Gates and Launch Readiness for Large Language Model Features: What You Need to Know

Evaluation gates are mandatory checkpoints that ensure LLM features are safe, accurate, and reliable before launch. Learn how top AI companies test models, what metrics matter, and why these processes are becoming non-negotiable.
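An evaluation gate can be reduced to a list of metric thresholds that must all pass before launch. The sketch below assumes each metric is either higher-is-better (such as accuracy) or lower-is-better (such as a harmful-output rate), and treats a missing metric as a failure. The metric names and limits are hypothetical examples, not recommended values.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    higher_is_better: bool = True


def evaluate_gate(results: Dict[str, float],
                  thresholds: List[Threshold]) -> Tuple[bool, List[str]]:
    """Return (passed, list of failure reasons)."""
    failures = []
    for t in thresholds:
        value = results.get(t.metric)
        if value is None:
            failures.append(f"{t.metric}: missing")  # unmeasured counts as failed
        elif t.higher_is_better and value < t.limit:
            failures.append(f"{t.metric}: {value} < {t.limit}")
        elif not t.higher_is_better and value > t.limit:
            failures.append(f"{t.metric}: {value} > {t.limit}")
    return not failures, failures


# Hypothetical launch criteria for an LLM feature.
gate = [Threshold("accuracy", 0.90),
        Threshold("harmful_output_rate", 0.01, higher_is_better=False),
        Threshold("p95_latency_s", 2.0, higher_is_better=False)]
print(evaluate_gate({"accuracy": 0.93, "harmful_output_rate": 0.004,
                     "p95_latency_s": 1.4}, gate))
```

Failing closed on missing metrics keeps a feature from launching simply because an evaluation was never run.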