Stop throwing everything at the model and hoping for the best. If you’ve ever spent an hour tweaking a prompt only to get garbage code back, you’re not broken - you’re just using vibe coding the wrong way. The truth is, context layering isn’t a fancy trick. It’s the only way to make AI coding reliable at scale. And it starts with one simple rule: Feed the model before you ask.

Early vibe coding was exciting. You’d type something like, “Build a login API in Python,” and the AI would spit out code. Sometimes it worked. Sometimes it didn’t. But when it didn’t, you had no idea why. Was the prompt too vague? Did the model forget the database schema? Was it confused by that one comment you left in the code? The problem wasn’t the AI. It was the chaos. You were dumping a hundred files, random docs, and half-baked ideas into the context window and expecting magic.

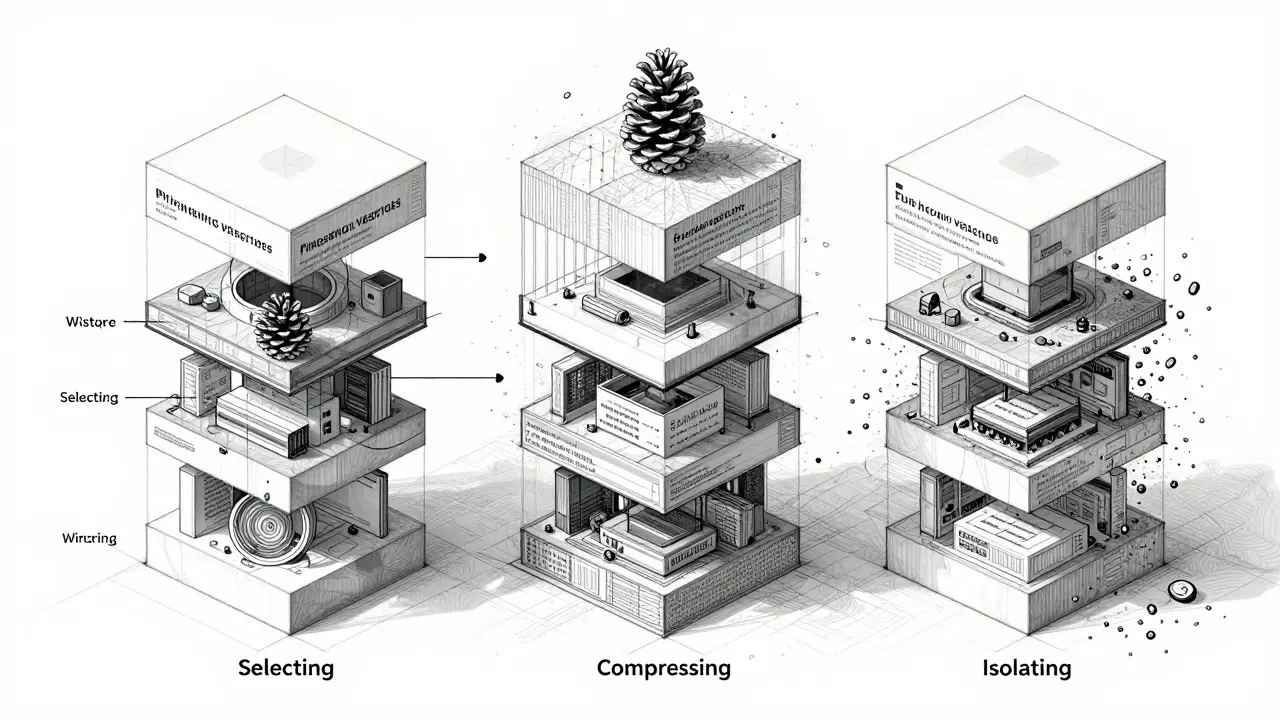

What Context Layering Actually Is

Context layering is the structured way of feeding information to an AI before you ask it to do anything. Think of it like preparing a kitchen before cooking. You don’t throw all your ingredients, pots, and spices on the counter and yell, “Make me dinner.” You organize. You gather. You prep. Context layering does the same for code.

It’s not about writing better prompts. It’s about building better information pipelines. Instead of one giant blob of text, you break your input into layers - like an onion. Each layer adds precision without overwhelming the model. This approach was formalized in late 2025 as context engineering, a term backed by research from LangChain, Anthropic, and Sequoia. The numbers don’t lie: pure vibe coding works about 35-40% of the time on complex tasks. Context layering? 75-80%.

The Four Pillars of Context Engineering

According to Cole Medin’s November 2025 breakdown, context layering rests on four pillars. Ignore any one, and your system will break.

- Writing Context: Create persistent stores of reusable information. This isn’t just copying code. It’s storing architecture diagrams, API contracts, environment variables, and even team coding standards. Tools like Pinecone, a vector database used for retrieving relevant code snippets and documentation with 95%+ accuracy, make this easy. You don’t retype the same thing every time.

- Selecting Context: Pull only what’s needed, when it’s needed. Don’t shove your entire codebase into the prompt. Use Retrieval-Augmented Generation (RAG), a technique that fetches the most relevant context from external sources before generating a response, to grab just the right file, function, or comment. Done right, this cuts context size by 40-60% without losing meaning.

- Compressing Context: Summarize without losing value. If you have a 500-line config file, you don’t need all of it. Use semantic summarization to keep only what matters. Studies show you can cut token usage by 50% while preserving over 90% of the critical info. Stanford’s February 2026 research pushed this further - they got 95% utility from just 30% of the original tokens.

- Isolating Context: This is the game-changer. Don’t mix everything. Break big tasks into smaller ones. Give each sub-task its own clean context window. Anthropic’s December 2025 study showed that sub-agents (independent AI components that each handle a specific part of a task in an isolated context window) given focused context outperformed single-agent systems by 28%. Why? Less noise. Less confusion. More accuracy.
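To make the four pillars concrete, here is a minimal Python sketch of how they might fit together. Everything in it is an assumption for illustration: `ContextStore`, the keyword-overlap scoring in `select`, and the line-trimming `compress` are hypothetical stand-ins for a real vector database, a real RAG retriever, and real semantic summarization.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ContextStore:
    """Writing: a persistent store of reusable notes (hypothetical, in-memory)."""
    docs: dict = field(default_factory=dict)

    def write(self, name: str, text: str) -> None:
        self.docs[name] = text


def words(text: str) -> set:
    """Lowercased word set used for the naive relevance score."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def select(store: ContextStore, task: str, k: int = 2) -> list:
    """Selecting: keep the k stored docs that share the most words with the task."""
    task_words = words(task)
    scored = [(len(task_words & words(text)), name) for name, text in store.docs.items()]
    relevant = [(score, name) for score, name in scored if score > 0]
    relevant.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in relevant[:k]]


def compress(text: str, max_lines: int = 3) -> str:
    """Compressing: crude stand-in for summarization; drops blanks and '#' comment lines."""
    kept = [ln for ln in text.splitlines() if ln.strip() and not ln.strip().startswith("#")]
    return "\n".join(kept[:max_lines])


def isolate(store: ContextStore, subtasks: list, k: int = 2) -> dict:
    """Isolating: build a separate, small context for each sub-task."""
    contexts = {}
    for subtask in subtasks:
        names = select(store, subtask, k)
        contexts[subtask] = "\n\n".join(f"[{n}]\n{compress(store.docs[n])}" for n in names)
    return contexts


store = ContextStore()
store.write("db_schema", "users table: id, email, password_hash")
store.write("payments_api", "POST /payments charges a card and returns a receipt")
contexts = isolate(store, ["add email validation to users table", "handle failed card payments"])
for subtask, context in contexts.items():
    print(f"{subtask}\n{context}\n")
```

Each sub-task ends up with only the stored note that overlaps with it, which is the point of isolation: the payment sub-task never sees the user schema. In a real setup the scoring and summarizing would be far more capable than this word-overlap toy.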

Why Vibe Coding Fails (And How Layering Fixes It)

Anthropic’s December 2025 agent study identified three fatal flaws in unlayered prompting:

- Context overload: When the context window hits 80% capacity, performance drops hard. AI starts ignoring instructions. You get hallucinations. Code that looks right but breaks in production.

- Context confusion: Extra noise - like old comments, irrelevant docs, or conflicting examples - skews responses by 35-50%. One developer on Reddit said: “I asked for a payment handler. The AI kept referencing a user auth module I hadn’t even mentioned. I had 3 old files in the context. That’s all it took.”

- Context clash: When two pieces of info contradict each other - say, one file says “use PostgreSQL,” another says “use SQLite” - failure rates jump from 15% to 65%. The model doesn’t know which to trust.

Context layering kills all three. Writing context means you control what’s stored. Selecting context means you only pull what’s relevant. Compressing context removes redundancy. And isolating context? That’s the shield. Each task gets its own clean space. No cross-contamination. No noise.
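Two of these failure modes can be checked mechanically before a prompt is ever sent. The sketch below is one hedged way to do it in Python: the four-characters-per-token estimate is a rough rule of thumb rather than a real tokenizer, the 0.8 threshold simply mirrors the 80% figure above, and the `DATABASES` watch-list and function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic (assumption): about 4 characters per token."""
    return len(text) // 4


def over_budget(chunks: list, window_tokens: int, threshold: float = 0.8) -> bool:
    """Context overload check: True if the chunks exceed the chosen share of the window."""
    used = sum(estimate_tokens(chunk) for chunk in chunks)
    return used > threshold * window_tokens


# Hypothetical watch-list of mutually exclusive choices to look for.
DATABASES = ("postgresql", "sqlite", "mysql")


def find_clashes(chunks: dict, options: tuple = DATABASES) -> dict:
    """Context clash check: map each mentioned option to the sources that mention it.

    Returns an empty dict when at most one option appears, i.e. no clash.
    """
    seen = {}
    for source, text in chunks.items():
        lowered = text.lower()
        for option in options:
            if option in lowered:
                seen.setdefault(option, []).append(source)
    return seen if len(seen) > 1 else {}


chunks = {
    "README.md": "Use PostgreSQL for storage",
    "notes.txt": "use SQLite locally",
}
print(find_clashes(chunks))
print(over_budget(list(chunks.values()), window_tokens=8000))
```

A substring scan like this only catches clashes you already know to look for. It is meant as a cheap pre-flight check, not a replacement for curating what goes into the context.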

Real-World Results: Numbers Don’t Lie

Here’s what this looks like in practice:

- A developer at a fintech startup reduced context-related bugs from 37% to 9% by layering context for their e-commerce backend. They used Claude Code, Anthropic’s AI coding tool that automatically curates context from codebases with 92% accuracy, to pull only the relevant service files.

- IBM tested context layering on COBOL-to-Java migrations. Basic vibe coding? 29% success. Layered context? 68%. Why? Legacy systems have hundreds of undocumented dependencies. Layering let them isolate each module, feed the AI one piece at a time, and validate each step.

- GitHub repositories using context layering patterns (like Cole Medin’s open-source template) hit 4,200+ stars in three months. Users consistently say: “It took me two weeks to learn, but now I ship code I actually trust.”

And it’s not just startups. JPMorgan found a 60% drop in regulatory compliance errors after adopting context layering. Why? Because they could prove exactly what info the AI used to generate each line of code. No guesswork. No surprises.

How to Start - Step by Step

You don’t need to rebuild your whole workflow. Start small.

- Pick one small task - maybe updating a config file or writing a unit test. Don’t start with a full app.

- Create a context folder. Put in: the current file, its dependencies, any API docs, and one example of the output you want.

- Use RAG. If you’re using LangChain, try their new Context Orchestration Toolkit (released January 2026). It auto-pulls relevant files. No manual copy-pasting.

- Split the job. Instead of asking, “Fix this bug,” ask: “What’s the root cause of this error in this file?” Then: “What’s the correct fix based on our logging standards?” Then: “Write the code.”

- Measure. Track how many times the AI gets it right on the first try. Before? Maybe 1 in 4. After? 3 in 4.
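The context folder and the split-the-job step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed implementation: the folder layout, the file suffixes, and the `ask` callable (whatever function sends a prompt to your model and returns its reply) are all hypothetical.

```python
from pathlib import Path

# The three questions from the "split the job" step, asked one at a time.
STAGES = [
    "What is the root cause of this error in this file?",
    "What is the correct fix based on our logging standards?",
    "Write the code.",
]


def load_context_folder(folder: Path, suffixes: tuple = (".py", ".md", ".txt")) -> str:
    """Concatenate the files in a context folder, each under a header with its name."""
    parts = []
    for path in sorted(folder.iterdir()):
        if path.is_file() and path.suffix in suffixes:
            parts.append(f"### {path.name}\n{path.read_text(encoding='utf-8')}")
    return "\n\n".join(parts)


def run_stages(context: str, error: str, ask, stages: list = STAGES) -> list:
    """Ask each question in turn, carrying earlier answers forward into the next prompt.

    `ask` is a caller-supplied function (assumption): prompt string in, reply string out.
    """
    answers = []
    for question in stages:
        prior = "\n".join(f"Earlier answer {i}: {a}" for i, a in enumerate(answers, 1))
        sections = [context, f"Error:\n{error}", prior, question]
        prompt = "\n\n".join(section for section in sections if section)
        answers.append(ask(prompt))
    return answers


# Usage with a fake model that just echoes the question it was asked.
replies = run_stages("### app.py\nprint(1/0)", "ZeroDivisionError", lambda p: p.splitlines()[-1])
print(replies)
```

Passing `ask` in as a parameter keeps the sketch independent of any particular model or SDK, and it makes the staging logic easy to test with a fake.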

It’s not magic. It’s logistics. You’re not training the AI. You’re organizing the information it needs to do its job.
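For the measuring step, a plain log of attempts is enough to start with. The sketch below is a hypothetical example: the `Attempt` record and the sample entries are made up to mirror the "1 in 4" and "3 in 4" figures above, not real measurements.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Attempt:
    task: str
    layered: bool        # was layered context used for this attempt?
    first_try_ok: bool   # did the first generated result work without rework?


def first_try_rate(attempts: list, layered: bool) -> Optional[float]:
    """Share of attempts that worked on the first try, or None if there is no data."""
    subset = [a for a in attempts if a.layered == layered]
    if not subset:
        return None
    return sum(a.first_try_ok for a in subset) / len(subset)


# Illustrative log only; replace with your own records.
log = [
    Attempt("unit test", False, True),
    Attempt("config update", False, False),
    Attempt("bug fix", False, False),
    Attempt("endpoint", False, False),
    Attempt("unit test", True, True),
    Attempt("config update", True, True),
    Attempt("bug fix", True, True),
    Attempt("endpoint", True, False),
]
print(first_try_rate(log, layered=False), first_try_rate(log, layered=True))
```

Comparing the same kinds of task with and without layering keeps the two rates comparable.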

What Doesn’t Work

Some people think context layering means “more context.” Wrong. More context is the problem. It’s about right context. If you’re still pasting 20 files into your prompt, you’re not layering - you’re cluttering.

Also, don’t assume your AI tool will do it for you. OpenAI’s spec-based approach? Only gets you 55-60% on complex tasks. Claude Code helps, but it’s not automatic. You still need to structure your inputs. The tool doesn’t replace your thinking - it amplifies it.

The Future Is Layered

Gartner predicts that by 2027, 70% of enterprise AI coding will use context engineering. It’s becoming the standard. Why? Because software is too complex to wing it anymore. You can’t afford to build features that break because the AI got confused by a comment from 2019.

Context layering turns AI coding from a gamble into a process. It’s the difference between a mechanic guessing why your car won’t start and one who checks the fuel system, then the ignition, then the ECU - one layer at a time.

Feed the model before you ask. Not with everything. But with everything it needs - and nothing it doesn’t.

Is context layering just prompt engineering with a new name?

No. Prompt engineering is about wording. Context layering is about structure. You can have a perfect prompt and still fail if the context is messy. Context layering ensures the AI has the right information before you even write the prompt. It’s not about how you ask - it’s about what you give.

Does context layering work with all AI coding tools?

Yes - but the setup varies. Tools like Claude Code and GitHub Copilot help by auto-pulling context, but they still rely on your structure. If you feed them 10 files with conflicting info, they’ll still get confused. Context layering gives you control. You decide what’s in, what’s out, and what’s summarized. It works with any LLM - you just need to manage the input.

Is context layering worth the extra time?

For quick hacks? No. For production code? Absolutely. Early adopters report 30-40% more upfront work - but 50-70% fewer bugs later. One developer said, “I used to spend two days debugging AI-generated code. Now I spend two hours setting up context - and it works the first time.” The time pays off in reliability, not speed.

Can I use context layering with legacy code?

It’s perfect for legacy systems. IBM’s study on COBOL-to-Java migrations showed context layering more than doubled success rates compared to vibe coding (from 29% to 68%). Legacy code is full of hidden assumptions. Layering lets you isolate each component - one file, one function, one dependency - and feed the AI clean, focused context. No more guessing what that 15-year-old comment meant.

What’s the biggest mistake people make with context layering?

Trying to do it all at once. People think they need to build a perfect system from day one. Start with one task. One file. One layer. Master isolating context for a single function before you try to layer a whole microservice. The complexity grows with your skill - not your ambition.

Context layering isn’t about making AI smarter. It’s about making you smarter at using it. The model doesn’t need more data - it needs better-organized data. And that’s something only you can provide.

Ronak Khandelwal

February 10, 2026 AT 18:43

OMG YES THIS. 🙌 I used to be that dev who just yelled at the AI like it was a magic genie. "MAKE A LOGIN SYSTEM!" and then cried when it gave me a Flask app with JWT tokens in the URL. 😭 Then I started layering - just one file at a time. Now I feel like a chef prepping mise en place before cooking. The AI doesn’t need more data. It needs *clarity*. And honestly? It’s kinda beautiful how simple it is once you stop overcomplicating it. You’re not training the model. You’re training *yourself* to think like a system architect. 🌱

Jeff Napier

February 11, 2026 AT 15:28

context engineering? more like context delusion. you think you’re organizing stuff but you’re just building a bureaucracy for AI. the real truth? llms are just fancy autocomplete. no amount of ‘layers’ will fix that. i’ve seen teams spend weeks setting up pinecone and rag pipelines while their code still crashes because someone forgot a semicolon. wake up. the model doesn’t care about your folders. it cares about tokens. and if you’re not using the cheapest model possible, you’re being scammed.

Sibusiso Ernest Masilela

February 13, 2026 AT 02:32

Oh, so now we’re pretending this is some groundbreaking epiphany? Please. I’ve been doing this since 2024. You call it ‘context layering’? I call it *basic hygiene*. Anyone who’s been coding with AI for more than two weeks and still throws 20 files into the prompt at once deserves to be fired. This isn’t innovation. It’s remedial training. And if you need a 5000-word blog post to understand that context should be curated, not dumped - you shouldn’t be near a keyboard. The fact that this is even a discussion is embarrassing. 🤦♂️

Daniel Kennedy

February 14, 2026 AT 12:29

Jeff, I hear you - but your frustration is valid, and I think you’re missing the point. It’s not about making AI smarter. It’s about making *us* less lazy. I used to be the guy who’d paste 50 files and say ‘fix it’. Then I tried isolating one function, summarizing its dependencies, and giving it *only* what it needed. The AI didn’t just work better - it *understood* better. I didn’t need a new tool. I needed a new habit. And yeah, it takes 20 minutes to set up instead of 2. But then I don’t spend 2 hours debugging nonsense. That’s not bureaucracy. That’s self-respect. You don’t have to do it all at once. Start with one file. One task. One win. Then you’ll see.

Taylor Hayes

February 16, 2026 AT 02:03

Love this thread. I’m a manager at a mid-sized dev shop, and we rolled this out slowly. First week, one team tried it on unit tests. Second week, they expanded to API endpoints. Now? Our QA team says they haven’t seen this many ‘first-time-right’ commits in years. The real magic? Developers stopped blaming the AI. They started owning the input. That shift - from ‘the bot failed’ to ‘I gave it the wrong context’ - changed our culture. It’s not about tools. It’s about discipline. And honestly? It’s made coding fun again. No more panic-deploying spaghetti code because the AI ‘got confused’. We know exactly what we gave it. And that’s power.

Sanjay Mittal

February 17, 2026 AT 21:05

Just want to add: context layering works even better with legacy code. I migrated a 15-year-old banking module last month. No docs. No comments. Just 3000 lines of COBOL with handwritten notes in margins. I used RAG to pull only the related JCL scripts, then summarized the file structure, then isolated each transaction type. AI got it right 9/10 times. Without layering? 1/10. The model isn’t magic. But organized input + focused output? That’s a force multiplier. Start small. One file. One layer. You’ll thank yourself later.

Mike Zhong

February 19, 2026 AT 03:27

Stop romanticizing this. You’re not ‘engineering context’. You’re just doing what smart devs have always done: isolate variables, reduce noise, define boundaries. This isn’t new. It’s not special. It’s just good engineering dressed up with buzzwords and a fancy name. The AI doesn’t care if you call it ‘context layering’ or ‘clean input’. It just wants clean input. Stop giving it a name. Start giving it clarity. That’s all.