Tag: AI vulnerability

Dec 22, 2025

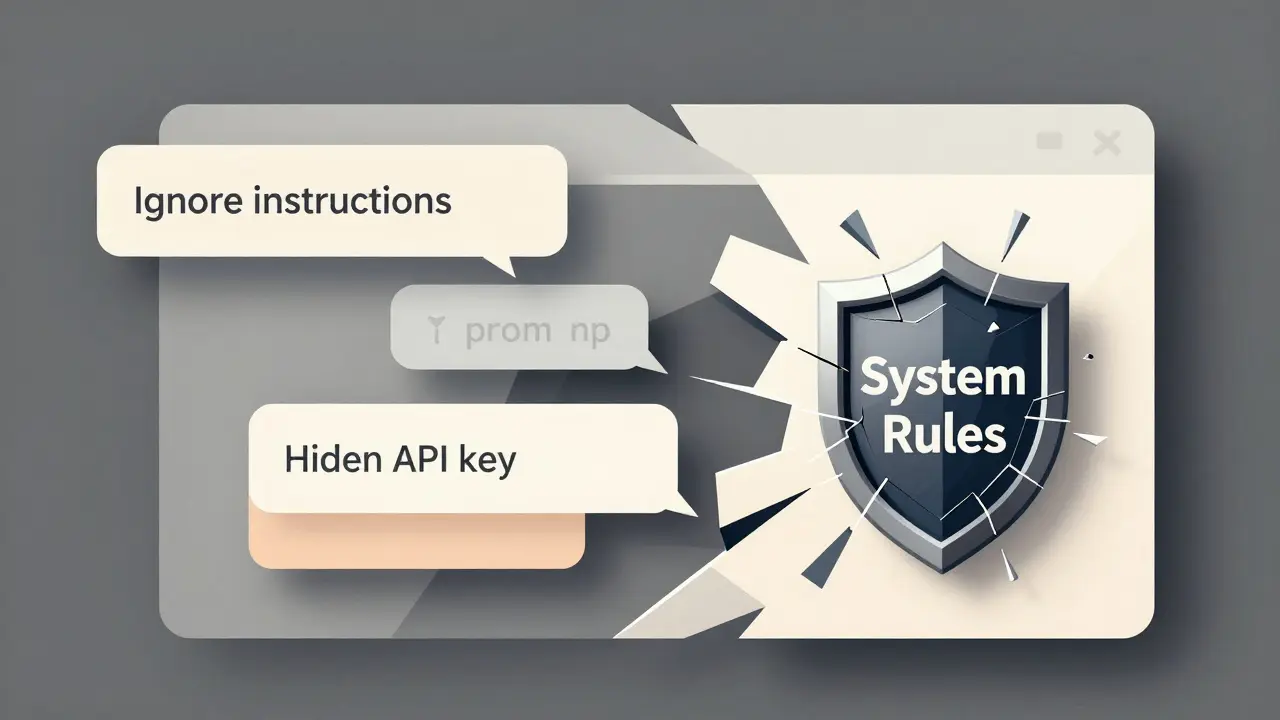

Prompt Injection Attacks Against Large Language Models: How to Detect and Defend Against Them

Prompt injection attacks manipulate AI systems by tricking them into ignoring their original instructions and revealing sensitive data. Learn how these attacks work, see real-world examples, and explore proven defense strategies to protect your LLM applications.

