Training a single large generative AI model can use more electricity than some small towns consume in a year. GPT-3 took about 1,300 megawatt-hours to train. GPT-4? Roughly 65,000 megawatt-hours, 50 times more. That’s not just expensive. It’s unsustainable. As AI models keep growing, so does their energy hunger. But there’s a way to shrink that footprint without sacrificing performance: sparsity, pruning, and low-rank methods.

Why Energy Efficiency in AI Training Matters

Most people think of AI as code and data. But behind every chatbot, image generator, or translation tool is a massive machine guzzling power. Training a model isn’t just about running code; it’s about heating up racks of GPUs for weeks. The MIT researchers behind a 2025 study found that nearly half the electricity used in training goes into squeezing out the last 2 or 3% of accuracy. That’s waste. And it’s avoidable.

Enter sparsity, pruning, and low-rank methods. These aren’t magic tricks. They’re mathematical strategies that strip away unnecessary parts of a neural network, like trimming a bush to let sunlight reach the core branches. The result? Models that use 30% to 80% less energy during training, with almost no drop in quality.

What Is Sparsity? Zero Weights, Big Savings

Neural networks are made of weights: numbers that set how strongly each input influences the output. Most of these weights don’t need to be active. Sparsity means forcing many of them to become exactly zero.

There are two types: unstructured and structured. Unstructured sparsity zeros out individual weights anywhere in the matrix. It can hit 80-90% sparsity, but it’s hard for hardware to use efficiently. Structured sparsity zeros out entire chunks, such as whole rows, columns, or filters. It’s easier for GPUs to handle. MobileBERT, for example, went from 110 million parameters down to 25 million using structured sparsity, and still hit 97% of its original accuracy on language tasks.
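To make the two flavors concrete, here is a minimal sketch in plain Python. The 4x4 weight values, the 0.1 threshold, and the helper names (`unstructured_prune`, `structured_prune_rows`, `sparsity`) are all made up for illustration; real frameworks operate on tensors and use their own criteria.

```python
# Toy 4x4 weight matrix; the values are invented for illustration only.
weights = [
    [0.9, -0.15, 0.4, 0.05],
    [0.02, 0.7, -0.03, 0.6],
    [-0.8, 0.01, 0.5, -0.2],
    [0.03, -0.04, 0.02, 0.01],
]


def unstructured_prune(matrix, threshold):
    """Zero every individual weight whose magnitude is below the threshold."""
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in matrix]


def structured_prune_rows(matrix, keep):
    """Zero whole rows, keeping the `keep` rows with the largest L1 norm."""
    norms = [sum(abs(w) for w in row) for row in matrix]
    ranked = sorted(range(len(matrix)), key=lambda i: norms[i], reverse=True)
    kept = set(ranked[:keep])
    return [
        row[:] if i in kept else [0.0] * len(row)
        for i, row in enumerate(matrix)
    ]


def sparsity(matrix):
    """Fraction of entries that are exactly zero."""
    total = sum(len(row) for row in matrix)
    zeros = sum(1 for row in matrix for w in row if w == 0.0)
    return zeros / total


unstructured = unstructured_prune(weights, threshold=0.1)
structured = structured_prune_rows(weights, keep=3)

print("unstructured sparsity:", sparsity(unstructured))
print("structured sparsity:", sparsity(structured))
```

In the unstructured result the zeros are scattered across every row, while the structured result removes one entire row. That regular shape is what lets hardware skip the work cleanly.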

Why does this save energy? Because zero weights mean fewer calculations. Less math = less power. NVIDIA found that a 50% sparse model runs 2.8 times faster on their A100 GPUs. That’s not just energy savings; it’s faster training, lower cloud bills, and less heat.
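The "less math" argument can be shown with an idealized operation count. The sketch below assumes a kernel that can skip every zero weight for free, which real hardware only approximates. The function names and the toy matrix are hypothetical.

```python
def dense_macs(matrix):
    """Multiply-accumulate operations a dense matrix-vector product needs."""
    return sum(len(row) for row in matrix)


def sparse_matvec(matrix, vector):
    """Multiply matrix by vector, skipping zero weights.

    Returns the result vector and the number of multiply-accumulates
    actually performed.
    """
    result = []
    macs = 0
    for row in matrix:
        acc = 0.0
        for w, x in zip(row, vector):
            if w != 0.0:  # a zero weight contributes nothing, so skip it
                acc += w * x
                macs += 1
        result.append(acc)
    return result, macs


# A 2x4 matrix at 50% sparsity (illustrative values).
half_sparse = [
    [1.0, 0.0, 2.0, 0.0],
    [0.0, 3.0, 0.0, 4.0],
]
output, macs_used = sparse_matvec(half_sparse, [1.0, 1.0, 1.0, 1.0])
print(output, macs_used, "of", dense_macs(half_sparse))
```

Here half of the multiply-accumulates disappear. Whether that turns into a matching drop in time or energy depends on how well the hardware and libraries exploit the zeros.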

Pruning: Cut the Fat, Keep the Function

Pruning is like removing unused tools from a mechanic’s toolbox. You start with a full model, then cut out the weights that contribute the least. There are three main ways:

- Magnitude-based pruning: Remove the smallest weights. Simple. Effective.

- Movement pruning: Watch how weights change during training and remove ones that don’t move much. More intelligent.

- Lottery ticket hypothesis: Find a small subnetwork inside the big model that can train just as well on its own. It’s like finding the one seed that grows into the whole plant.
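Of the three, magnitude-based pruning is the easiest to sketch. The example below treats a layer as a flat list of weights, which is a simplification, and `magnitude_prune` is an illustrative name rather than a library function. Movement pruning and lottery-ticket search need a training loop, so they are not shown.

```python
def magnitude_prune(weights, target_sparsity):
    """Return a copy with the smallest-magnitude weights set to zero.

    `target_sparsity` is the fraction of weights to remove (0.0 to 1.0).
    """
    n_prune = int(len(weights) * target_sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


layer = [0.5, -0.05, 0.3, 0.01, -0.7, 0.02, 0.9, -0.1]  # made-up values
pruned_layer = magnitude_prune(layer, target_sparsity=0.5)
print(pruned_layer)
```

The four weights closest to zero are removed and the four largest survive untouched. In practice, a round of pruning is usually followed by more training so the remaining weights can compensate.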

University of Michigan tested iterative magnitude pruning on GPT-2. At 50% sparsity, they cut training energy by 42%, and accuracy dropped just 0.8%. That’s a win. Developers on TensorFlow’s GitHub reported similar results: pruning BERT-base cut energy from 213 kWh to 126 kWh, with only a 0.9% accuracy loss.

But here’s the catch: you can prune too hard. Go beyond 70% sparsity, and accuracy tanks fast. Dr. Lirong Liu from the University of Surrey warns that over-pruning can erase the energy savings you worked so hard for. It’s not about removing as much as possible. It’s about removing just enough.

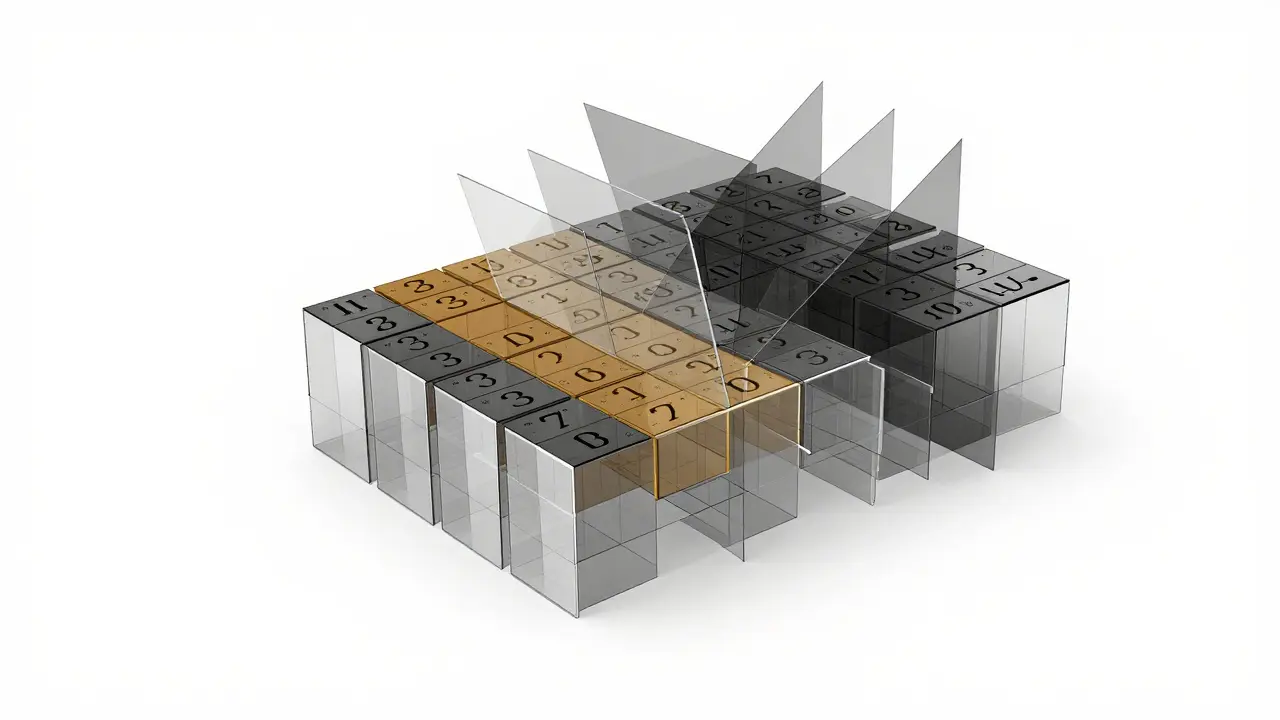

Low-Rank Methods: Compressing Matrices Like Origami

Imagine a giant spreadsheet with thousands of rows and columns. Low-rank methods say: “What if we could describe this whole sheet with just a few key patterns?”

They use math called matrix decomposition, such as Singular Value Decomposition (SVD), to break down huge weight matrices into smaller, simpler ones. Instead of storing one massive matrix, you store two or three smaller ones that, when multiplied, recreate or closely approximate the original.
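A quick parameter count shows where the savings come from. The sketch below uses a tiny matrix that is exactly rank 1, so two small factors rebuild it perfectly. Real weight matrices are only approximately low-rank, so a truncated decomposition gives an approximation instead. The 768x768 size and rank 8 are illustrative choices, not figures from the studies cited here.

```python
def matmul(a, b):
    """Plain-Python matrix product of a (m x r) and b (r x n)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def full_params(rows, cols):
    """Values stored for one dense rows x cols matrix."""
    return rows * cols


def low_rank_params(rows, cols, rank):
    """Values stored for two factors: (rows x rank) and (rank x cols)."""
    return rank * (rows + cols)


# Two small factors that multiply out to a 3x4 matrix.
left = [[1.0], [2.0], [3.0]]      # 3 x 1
right = [[1.0, 2.0, 4.0, 8.0]]    # 1 x 4
rebuilt = matmul(left, right)     # 12 values recovered from 7 stored
print(rebuilt)

# Illustrative layer size: a 768 x 768 matrix factored at rank 8.
dense = full_params(768, 768)
factored = low_rank_params(768, 768, 8)
print(dense, factored, dense / factored)
```

At these assumed sizes the two factors hold 12,288 values instead of 589,824, a 48x reduction in stored parameters. How much accuracy survives depends on how much of the matrix the chosen rank actually captures.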

NVIDIA used low-rank adaptation (LoRA) on BERT-base. The result? Training energy dropped from 187 kWh to 118 kWh, a 37% reduction. Accuracy? 99.2% of the original. That’s not a trade-off. That’s an upgrade.

Tucker decomposition and tensor train methods can shrink models by a factor of 3 to 4. And because these compressed matrices are easier to compute, they speed up both training and inference. The beauty? You can apply low-rank methods on top of sparsity or pruning. Combine them, and you get even bigger savings.

How Do These Compare to Other Methods?

There are other ways to cut AI energy use, like mixed precision (using 16-bit numbers instead of 32-bit) or early stopping (shutting down training early). But on their own, they don’t save as much.

Mixed precision saves 15-20% energy. Good, but not enough. Early stopping saves 20-30%, but risks underperforming models. Sparsity and pruning? They save 40-60% and preserve accuracy. IBM’s October 2024 analysis showed that combining structured pruning with low-rank adaptation cut Llama-2-7B training energy by 63%. Mixed precision alone? Just 42%.

But they’re not perfect. They’re harder to implement than distillation (training a smaller model to mimic a bigger one). They need tuning. They add 5-15% to development time. A developer on Reddit said it took them three weeks just to get pruning working right. But once it did? Training costs dropped from $2,850 per run to $1,620.
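For readers who want to check the percentages behind these before-and-after figures, the arithmetic is a one-liner. The sketch below only reuses numbers quoted in this article; `percent_reduction` is an illustrative helper name.

```python
def percent_reduction(before, after):
    """Percentage saved when a quantity drops from `before` to `after`."""
    return (before - after) / before * 100.0


# Figures quoted in this article (kWh per training run, or dollars per run).
reported = {
    "BERT-base, pruning (kWh)": (213, 126),
    "BERT-base, LoRA (kWh)": (187, 118),
    "Reddit developer, cost per run ($)": (2850, 1620),
}

for label, (before, after) in reported.items():
    print(f"{label}: {percent_reduction(before, after):.1f}% lower")
```

This works out to roughly 40.8%, 36.9%, and 43.2%. The second figure matches the 37% quoted for LoRA, and the Reddit developer’s cost per run fell by a bit more than two fifths.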

Real-World Implementation: What It Takes

You can’t just flip a switch. These methods need careful setup. Here’s how most teams do it:

- Train a baseline model: get your accuracy baseline first.

- Apply sparsity or pruning gradually: don’t chop everything at once. Increase sparsity slowly during fine-tuning.

- Validate accuracy: check if performance holds on your test set.

- Optimize for deployment: some frameworks need special libraries to handle sparse models.
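The "gradually" in the second step is usually expressed as a schedule that maps the training step to a target sparsity. Below is a minimal sketch of one common shape, a cubic ramp that prunes quickly at first and then eases off. The function name, parameter names, and default values are assumptions for illustration, not any specific library’s API.

```python
def sparsity_at_step(step, begin_step, end_step,
                     initial_sparsity=0.0, final_sparsity=0.5, power=3):
    """Target sparsity for a given training step.

    Before `begin_step` the model stays at `initial_sparsity`; after
    `end_step` it stays at `final_sparsity`; in between it follows a
    polynomial ramp that rises quickly at first and then flattens.
    """
    if step <= begin_step:
        return initial_sparsity
    if step >= end_step:
        return final_sparsity
    progress = (step - begin_step) / (end_step - begin_step)
    remaining = (1.0 - progress) ** power
    return final_sparsity + (initial_sparsity - final_sparsity) * remaining


# Ramp from 0% to 50% sparsity over 1,000 fine-tuning steps.
schedule = [sparsity_at_step(s, 0, 1000) for s in range(0, 1001, 100)]
print([round(s, 3) for s in schedule])
```

At each step, the training loop would prune to the scheduled sparsity, then keep training so accuracy can recover before the next cut. The validation step then confirms that the final sparsity level still meets the baseline.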

TensorFlow’s Model Optimization Toolkit and the TorchPruner library for PyTorch now include built-in tools. But documentation varies. TensorFlow’s guides got 4.2/5 stars. PyTorch’s? 3.8/5. Community forums are full of people asking, “Why did my model crash after pruning?” The answer: load imbalance. When some GPUs get sparse work and others get full work, training slows down. That’s where tools like University of Michigan’s Perseus come in: they sync the workload across machines.
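Why imbalance hurts can be seen with a back-of-the-envelope model. The sketch below assumes synchronous training, where every step waits for the slowest GPU. The per-GPU timings are invented, and this does not describe how Perseus itself works.

```python
def step_time(worker_times):
    """In synchronous training, a step takes as long as the slowest worker."""
    return max(worker_times)


def idle_fraction(worker_times):
    """Share of total GPU time spent waiting for the slowest worker."""
    slowest = max(worker_times)
    waiting = sum(slowest - t for t in worker_times)
    return waiting / (slowest * len(worker_times))


# Hypothetical seconds per step: one GPU got dense work, three got sparse work.
imbalanced = [1.0, 0.5, 0.5, 0.5]
# The same total work spread evenly across the four GPUs.
balanced = [0.625, 0.625, 0.625, 0.625]

print(step_time(imbalanced), idle_fraction(imbalanced))
print(step_time(balanced), idle_fraction(balanced))
```

With these made-up numbers, the imbalanced setup leaves GPUs idle 37.5% of the time and each step takes 1.0 second. Spreading the same work evenly brings the step down to 0.625 seconds with no waiting.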

Enterprise teams estimate 3-5 person-weeks of effort to get this right. But the ROI? Faster training cycles. Lower cloud bills. And the ability to train bigger models on the same budget.

The Future: Mandatory Efficiency

This isn’t a niche trend. It’s becoming standard.

By 2027, Gartner predicts 90% of enterprise AI deployments will use at least one compression technique. AWS, Google, and NVIDIA are building it into their platforms. Google’s TPU v5p (expected 2025) will auto-configure sparsity. NVIDIA’s Blackwell Ultra chips (late 2025) will accelerate pruning during training.

Regulation is catching up. The European AI Act requires energy logging for large models by mid-2026. The World Economic Forum warns data centers could hit 1.2% of global carbon emissions by 2027 unless we act.

And the most promising part? These techniques don’t need new hardware. They work on today’s GPUs. You don’t have to wait for the next chip. You can start today.

Final Thoughts: Efficiency Is the New Scale

We used to think bigger models meant better AI. Now we know: smarter models do. Sparsity, pruning, and low-rank methods let you train models that are lean, fast, and efficient, not just massive.

They’re not about cutting corners. They’re about cutting waste. And in a world where AI’s energy use doubles every 100 days, that’s not optional. It’s essential.