Switching between Large Language Model (LLM) providers isn’t as simple as changing a password. One day you’re using OpenAI’s GPT-4, the next you’re trying Anthropic’s Claude 3 - and suddenly your app breaks. Not because the models are worse, but because their APIs, response formats, and even how they handle prompts behave differently. This isn’t a bug. It’s the norm. And it’s costing companies millions.

Organizations that rely on multiple LLMs for tasks like customer support, document analysis, or medical data processing are hitting a wall: every time they swap models, they risk broken workflows, inconsistent outputs, or sudden cost spikes. The answer isn’t to pick one provider and stick with it. It’s to build systems that don’t care which provider is behind the curtain. That’s where interoperability patterns come in.

Why You Can’t Just Swap LLMs Like Lightbulbs

At first glance, all LLMs look the same: you send text, you get text back. But under the hood, they differ in ways that matter. OpenAI’s chat API takes parameters like temperature and max_tokens and carries the system prompt inside the message list. Anthropic’s Messages API takes the system prompt as a separate top-level field, requires max_tokens on every request, and has its own format for tool calls. Google Gemini nests content under its own field names (contents and parts) and calls the output limit maxOutputTokens. And then there’s context length - some models handle 8k tokens, others 200k. If your app expects 100k tokens of context and you switch to a model that caps at 32k, the request either fails outright or, if some layer in between trims the input to fit, your data gets chopped. Silent failure. No warning.
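One way to make an oversized prompt fail loudly is to check its size against the target model's limit before sending anything. The sketch below is illustrative only: the model names, the limits table, and the four-characters-per-token estimate are assumptions made for this example, not real provider values. A production check would use each provider's own tokenizer.

```python
# Hypothetical context limits in tokens; real values depend on provider and model version.
CONTEXT_LIMITS = {
    "small-model": 8_000,
    "mid-model": 32_000,
    "large-model": 200_000,
}


class ContextTooLargeError(ValueError):
    """Raised when a prompt would not fit in the target model's context window."""


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about four characters per token for English text.
    return max(1, len(text) // 4)


def check_fits(model: str, prompt: str, reserve_for_output: int = 1_000) -> int:
    """Return the estimated prompt size in tokens, or raise if it will not fit."""
    limit = CONTEXT_LIMITS[model]
    needed = estimate_tokens(prompt) + reserve_for_output
    if needed > limit:
        raise ContextTooLargeError(f"{model}: need ~{needed} tokens, limit is {limit}")
    return needed - reserve_for_output
```

Calling check_fits before every request means a switch to a smaller model surfaces as an exception in testing rather than as missing data in production.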

Even more dangerous is behavioral drift. Two models given the exact same prompt and code can produce wildly different results. In one test by Newtuple Technologies, Model A successfully extracted data from complex tables by improvising connections between rows. Model B, using the same code, failed - not because it was less capable, but because it followed instructions too rigidly. That’s not a model problem. It’s a system problem. If you don’t test how models behave under real conditions, swapping them is a gamble.

The Five Patterns That Actually Work

After years of trial and error, five patterns have emerged as the most reliable for abstracting LLM providers. They don’t just make switching easier - they make it safe.

- Adapter Integration: Think of this as a universal power adapter. It translates any LLM’s API into a single, consistent format. LiteLLM is the most popular open-source implementation. With one line of code, you can route requests to OpenAI, Anthropic, Google, or even local models like Llama 3. It normalizes parameters, handles token limits, and converts response formats automatically. According to Newtuple’s case study, this reduces integration time by 70%.

- Hybrid Architecture: This splits your system into two parts. One part handles heavy LLM inference. The other manages caching, data enrichment, and retry logic. By isolating these functions, you can swap models without touching your core logic. One company cut third-party LLM costs by 40% by caching frequent queries and routing low-priority tasks to cheaper models during peak hours.

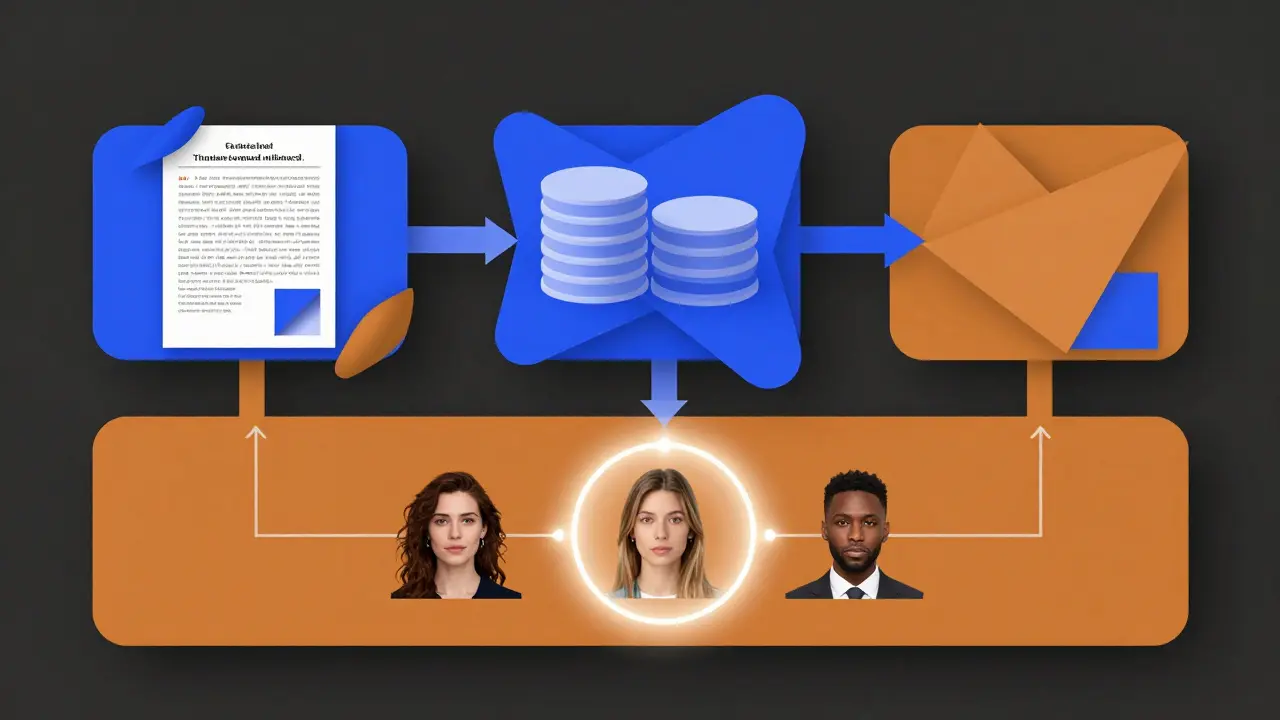

- Pipeline Workflow: Instead of sending a prompt to a model and waiting for a final answer, you break the task into steps. Step 1: extract entities. Step 2: validate against a database. Step 3: generate summary. Each step can use a different model optimized for that job. A healthcare startup used this to transform clinical notes into FHIR records with 92.7% accuracy - far better than any single model could achieve alone.

- Parallelization and Routing: Send the same request to multiple models at once. Compare their outputs. Pick the best one. Or use a meta-model to decide which model to trust based on confidence scores. This isn’t just redundancy - it’s a way to catch errors before they reach users. One financial services firm reduced output errors by 61% using this method.

- Orchestrator-Worker: A central controller (the orchestrator) decides which model to use based on context: cost, speed, accuracy, or even user location. Workers execute the actual calls. This pattern lets you route high-risk tasks to more expensive, safer models and low-risk ones to cheaper, faster ones. It’s how companies avoid rate limits and stay under budget.
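To make the first of these patterns concrete, here is a minimal adapter sketch. The two fake_provider functions are invented stand-ins for real SDK calls, with deliberately different argument names and response shapes. None of the names below come from a real library.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Reply:
    """Provider-neutral response shape used by the rest of the application."""
    text: str
    provider: str


# Stand-ins for real SDK calls (assumption: invented for this sketch).
def fake_provider_a(messages: list, max_tokens: int) -> dict:
    return {"choices": [{"message": {"content": "A says: " + messages[-1]["content"]}}]}


def fake_provider_b(system: str, prompt: str, max_output: int) -> dict:
    return {"output": [{"text": "B says: " + prompt}]}


def call_a(system: str, prompt: str, max_tokens: int) -> Reply:
    # Provider A wants the system prompt inside the message list.
    raw = fake_provider_a(
        [{"role": "system", "content": system}, {"role": "user", "content": prompt}],
        max_tokens=max_tokens,
    )
    return Reply(raw["choices"][0]["message"]["content"], "a")


def call_b(system: str, prompt: str, max_tokens: int) -> Reply:
    # Provider B takes the system prompt separately and names the limit differently.
    raw = fake_provider_b(system=system, prompt=prompt, max_output=max_tokens)
    return Reply(raw["output"][0]["text"], "b")


ADAPTERS: Dict[str, Callable[[str, str, int], Reply]] = {"a": call_a, "b": call_b}


def generate(provider: str, prompt: str, system: str = "", max_tokens: int = 256) -> Reply:
    """Single entry point: callers never see provider-specific shapes."""
    return ADAPTERS[provider](system, prompt, max_tokens)
```

Application code calls generate("a", ...) or generate("b", ...) and always gets a Reply back, so adding a provider means writing one more adapter function rather than touching every call site.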

LiteLLM vs. LangChain: What’s the Real Difference?

Two frameworks dominate the open-source space: LiteLLM and LangChain. They’re often compared, but they solve different problems.

LiteLLM is minimalist. It doesn’t try to do everything. It just standardizes API calls. If you’re using the OpenAI SDK, you swap one call: completion, imported from litellm, takes the place of openai.ChatCompletion.create. That’s it. No new concepts. No complex chains. Just faster switching. Developers report onboarding in 8-12 hours. Reddit users have cut API costs by 35% by routing traffic to cheaper providers during off-peak hours.
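A minimal sketch of that call looks roughly like this. It assumes the litellm package is installed and that provider API keys are set in the environment (for example OPENAI_API_KEY or ANTHROPIC_API_KEY). The ask helper and its injectable complete argument are additions for this example, so the function can be tried without network access.

```python
from typing import Callable, Optional


def ask(model: str, prompt: str, complete: Optional[Callable] = None) -> str:
    """Send one user message and return the reply text.

    `complete` defaults to litellm's `completion`; pass a stub to run offline.
    """
    if complete is None:
        # Imported lazily so the helper can be loaded without litellm installed.
        from litellm import completion as complete
    response = complete(model=model, messages=[{"role": "user", "content": prompt}])
    # LiteLLM returns responses in the OpenAI chat-completion shape.
    return response.choices[0].message.content
```

Switching providers is then a matter of changing the model string, for example from "gpt-4o" to "anthropic/claude-3-haiku-20240307". Both strings are examples only; check LiteLLM's provider list for the exact names available to you.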

LangChain is a full toolkit. It lets you chain prompts, connect to databases, use memory, and integrate tools like calculators or web search. But that power comes at a cost. Setting up a basic agent can take 40+ hours. The learning curve is steep. And because it’s so flexible, it’s easy to over-engineer. G2 reviews give it 4.2/5, but 38% of users cite “too complex” as a reason for abandoning it.

If you just need to swap models? Use LiteLLM. If you’re building an AI agent that talks to CRM systems, scrapes websites, and remembers past conversations? LangChain makes sense. But don’t use LangChain just because it’s popular. You’re adding complexity for no reason if you don’t need it.

The Hidden Problem: Behavioral Consistency

APIs can be standardized. Response formats can be normalized. But how a model thinks? That’s harder. Anthropic’s Model Context Protocol (MCP), announced in November 2024, was a breakthrough because it didn’t just standardize how tools are called - it defined how models should interact with external data. This lets LangChain and other frameworks connect MCP-compatible models to tools and data sources with far less custom code.

But even MCP doesn’t solve behavioral drift. A 2025 arXiv study tested 13 open-source models on agricultural data. One model, qwen2.5-coder:32b, scored 0.99 on three datasets. Every other model scored below 0.2. Then they switched to dataset version v4 - and all models failed. Not because they were bad. Because the data was different. Real-world data is messy. And models don’t adapt the same way.

Professor Michael Jordan of UC Berkeley put it bluntly: “Interoperability standards must address behavioral consistency, not just API compatibility.” That means you can’t just swap models. You have to test them - not on clean benchmarks, but on your actual data, with real user inputs, under real load.
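Testing on your own data can start very small. The sketch below assumes each model is wrapped as a plain function from prompt to text and that you have a handful of prompts with known-good answers. Exact string matching is a simplifying assumption; real evaluations usually need a task-specific comparison.

```python
from typing import Callable, Dict, List, Tuple

# Each model is represented as a plain callable from prompt to output text.
ModelFn = Callable[[str], str]


def score_models(
    models: Dict[str, ModelFn],
    cases: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Return, per model, the fraction of cases whose output equals the expected answer."""
    scores = {}
    for name, fn in models.items():
        hits = sum(1 for prompt, expected in cases if fn(prompt).strip() == expected)
        scores[name] = hits / len(cases)
    return scores


def disagreements(models: Dict[str, ModelFn], prompts: List[str]) -> List[str]:
    """Return the prompts on which the models do not all give the same answer."""
    return [p for p in prompts if len({fn(p).strip() for fn in models.values()}) > 1]
```

The disagreement list is often more useful than the scores, because it points at the specific inputs where a swap would change what users see.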

Who’s Using This, and Why?

It’s not just tech startups. Fortune 500 companies are adopting these patterns fast. By December 2024, 67% of them had implemented some form of LLM abstraction. Why?

- Cost control: 68% of enterprise users say avoiding rate limits on primary providers is their top reason.

- Regulation: The EU AI Act now requires documentation of model switching procedures for high-risk applications.

- Reliability: Healthcare systems using FHIR-GPT reduced manual data entry by 63%. One hospital system cut errors in patient record extraction by 81%.

The market for AI interoperability hit $1.4 billion in Q3 2024 and is projected to grow at 38.7% annually through 2029. But adoption isn’t uniform. Open-source tools like LiteLLM and LangChain lead in usage, while proprietary solutions from cloud providers are catching up. No single solution controls more than 22% of the market. That’s good - it means no one vendor owns the future.

What’s Next? The Road to True Interoperability

By 2026, Gartner predicts that 75% of new enterprise LLM deployments will use multi-provider strategies. But the real challenge isn’t technical. It’s cultural. Developers can build adapters. But who defines what “good output” looks like across models? Who sets safety thresholds? Who tests for bias when switching from one model to another?

Mozilla.ai is pushing for “any-*” tools - like any-llm, any-agent, and soon, any-evaluator - to measure performance consistently across providers. ONNX 1.16, released in September 2024, now supports faster model conversion between frameworks. MCP 1.1 cut integration time by 35% for early adopters.

But the real progress will come when companies stop treating LLMs as black boxes and start treating them as components - with documented behavior, predictable limits, and testable outputs. Until then, interoperability isn’t just a pattern. It’s a necessity.

How to Get Started

If you’re thinking about abstracting your LLM providers, here’s how to begin:

- Map your current usage: Which models are you using? What tasks do they handle? What’s your average context length?

- Test behavioral differences: Run the same prompt across your models. Compare outputs. Are they consistent? Do any fail silently?

- Start small: Use LiteLLM to route one low-risk task (like summarizing emails) to two providers. Measure performance and cost.

- Document your switching rules: When do you switch? Why? What’s your fallback plan? This isn’t optional anymore - especially if you’re in healthcare, finance, or legal services.

- Build monitoring: Track accuracy, latency, and cost per request. Set alerts if performance drops.
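For the monitoring step, a minimal in-memory sketch might look like the following. The class name, the fields, and the 0.9 default threshold are assumptions chosen for illustration. A real deployment would send these numbers to whatever metrics system you already run.

```python
from collections import defaultdict
from statistics import mean


class RequestLog:
    """Collects per-model latency, cost, and correctness, and flags weak models."""

    def __init__(self, min_accuracy: float = 0.9):
        self.min_accuracy = min_accuracy
        self._rows = defaultdict(list)  # model name -> list of (latency_s, cost_usd, correct)

    def record(self, model: str, latency_s: float, cost_usd: float, correct: bool) -> None:
        self._rows[model].append((latency_s, cost_usd, correct))

    def summary(self, model: str) -> dict:
        """Aggregate stats for one model; expects at least one recorded request."""
        rows = self._rows[model]
        return {
            "requests": len(rows),
            "avg_latency_s": mean(r[0] for r in rows),
            "avg_cost_usd": mean(r[1] for r in rows),
            "accuracy": sum(r[2] for r in rows) / len(rows),
        }

    def alerts(self) -> list:
        """Names of models whose observed accuracy is below the configured minimum."""
        return [m for m in self._rows if self.summary(m)["accuracy"] < self.min_accuracy]
```

Recording every request this way gives you the before-and-after numbers you need to judge whether a model switch actually helped.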

Don’t wait for a crisis. The next time your primary provider goes down or hikes prices, you’ll wish you’d built this layer yesterday.

What’s the easiest way to start abstracting LLM providers?

Use LiteLLM. It requires changing just one line of code if you’re already using the OpenAI SDK. It supports over 100 models, normalizes API calls, handles context windows, and reduces integration time by up to 70%. No complex chains. No new concepts. Just plug and play.

Can I use multiple LLMs at once without breaking my app?

Yes - but only if you use a pattern like Parallelization and Routing or Orchestrator-Worker. Sending the same request to multiple models and comparing outputs lets you catch errors before they reach users. You can also route tasks based on cost, speed, or model strengths. This isn’t theoretical - companies have reduced errors by over 60% using this approach.
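A bare-bones version of the compare-outputs idea is a majority vote. The sketch below assumes each model is wrapped as a function from prompt to text and that answers are short enough for exact matching to be meaningful, as with a classification label or an extracted value. Free-form text would need a softer comparison.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Tuple


def majority_answer(models: Dict[str, Callable[[str], str]], prompt: str) -> Tuple[str, float]:
    """Query all models concurrently; return (most common answer, share of models agreeing)."""
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        answers = list(pool.map(lambda fn: fn(prompt).strip(), models.values()))
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)
```

A low agreement share can be treated as a signal to escalate the request to a stronger model or a human reviewer instead of returning the answer directly.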

Is LangChain worth the complexity?

Only if you need advanced features like memory, tool calling, or multi-step workflows. If you’re just swapping models, LangChain adds unnecessary overhead. Most teams waste months building complex chains they never use. Stick with LiteLLM unless you’re building an AI agent that interacts with databases, APIs, or external tools.

What’s the biggest risk when switching LLM providers?

Behavioral drift. Two models given the same prompt can produce wildly different results - not because one is better, but because they interpret instructions differently. One might be too rigid. Another might improvise. If you don’t test with real-world data, switching can reduce accuracy by 20% or more. Always validate output quality before going live.

Are there any regulations around LLM interoperability?

Yes. The EU AI Act, effective February 2025, requires organizations to document how they switch between LLMs in high-risk applications - like healthcare, hiring, or financial services. You must show how you test for consistency, handle failures, and ensure safety across models. Ignoring this isn’t an option anymore.

How do I know which model to use for which task?

Start by testing. Use your actual data. Run the same task across your models. Measure accuracy, speed, and cost. Some models are great at summarization. Others excel at structured data extraction. Build a simple routing table: if the task is complex and needs tables, use Model X. If it’s fast and simple, use Model Y. Let data, not hype, decide.
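The routing table described here can literally be a dictionary. In the sketch below the task kinds, the risk flag, and the model names are all placeholders, not recommendations. The entries should come from your own measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    kind: str        # e.g. "summarize", "extract_table"
    high_risk: bool  # whether a wrong answer is costly


# Hypothetical routing table; fill it in from your own test results.
ROUTES = {
    ("extract_table", True): "model-x-large",
    ("extract_table", False): "model-x-small",
    ("summarize", True): "model-x-small",
    ("summarize", False): "model-y-fast",
}
DEFAULT_MODEL = "model-x-large"  # unlisted tasks fall back to the most capable option


def pick_model(task: Task) -> str:
    """Look up the model for a task, falling back to the default for unlisted combinations."""
    return ROUTES.get((task.kind, task.high_risk), DEFAULT_MODEL)
```

Keeping the table as data rather than scattered if-statements also gives you one file to show when someone asks how routing decisions are made.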

E Jones

February 14, 2026 AT 11:11

Let me tell you something nobody else will admit - this whole LLM interoperability thing is just Big Tech’s way of locking us in. LiteLLM? Sure, it sounds great. But who owns the data when you route through it? Who’s logging your prompts? I’ve seen the backend traffic logs from a friend at a startup - they were sending raw medical notes through a ‘simple adapter’ and suddenly got a subpoena from a data broker in Latvia. It’s not about switching models - it’s about who controls the pipeline. And if you’re not paranoid about that, you’re either lying or already owned.

And don’t get me started on ‘behavioral consistency.’ That’s just corporate speak for ‘we don’t know why our AI suddenly started writing poems instead of invoices.’ One day your customer service bot says ‘I feel your pain’ - next day it’s quoting Nietzsche. Who tests that? Who signs off? Not HR. Not legal. Definitely not the devs who just copied a GitHub gist. We’re all just monkeys typing on keyboards while the real puppet masters laugh in their Swiss bunkers.

They say ‘use parallelization’ - great. So now you’re paying three providers to argue among themselves while your users wait for an answer. That’s not innovation - that’s performance art funded by VC cash. And the EU AI Act? Please. They’ll regulate the lipstick on the pig while the real manipulation happens in the model weights no one can audit. We’re not building systems. We’re building digital funhouse mirrors that reflect whatever corporate agenda is hot this quarter.

And yet - here I am, still using LiteLLM. Because what’s the alternative? Running your own 70B model on a Raspberry Pi? I’ve got kids to feed. But I sleep with a burner laptop running offline Llama 3, encrypted, air-gapped, and screaming into the void. Just in case.

They call this progress. I call it a slow-motion heist. And you? You’re just the sucker who thinks the adapter is the solution. The real solution is burning it all down. But I’ll be the first to admit - I don’t have the guts to do it. So here we are. Still clicking ‘submit’ and hoping for the best.

selma souza

February 14, 2026 AT 13:30

There are multiple grammatical errors in the original post that undermine its credibility. For instance, ‘they’re wildly different’ should be ‘they are wildly different’ in formal technical writing. Additionally, the phrase ‘it’s the norm’ lacks a subject-verb agreement in context - ‘it’ refers to a plural concept (APIs, formats, behaviors), thus requiring ‘they are the norm.’ Furthermore, the use of em dashes without proper spacing around them is nonstandard in published technical documentation. The capitalization of ‘LiteLLM’ and ‘LangChain’ is inconsistent in places - either both should be consistently capitalized as proper nouns or neither. These aren’t pedantic nitpicks; they erode trust in the technical assertions. If the author can’t manage basic syntax, how can we trust their architectural recommendations?

Frank Piccolo

February 16, 2026 AT 02:44

Ugh. Another ‘let’s abstract the abstraction’ blog post from someone who thinks ‘interoperability’ means slapping a wrapper on everything and calling it a day.

LiteLLM? That’s just a glorified proxy. You think you’re saving money? You’re just adding latency, debugging nightmares, and another dependency that’ll go EOL next year. And don’t get me started on ‘parallelization’ - running three models at once? That’s not smart, that’s wasteful. You’re not building AI systems - you’re running a crypto mining rig with GPTs.

And LangChain? Oh, sweet mercy. That thing is a Frankenstein’s monster of overengineering. I’ve seen teams spend six months on it and still can’t get a simple summarization to work. It’s not a tool - it’s a career trap.

Here’s the truth: you don’t need patterns. You need one damn model that works. Pick the best one - GPT-4-turbo - and stick with it. Stop chasing shiny objects. Stop ‘abstracting.’ Stop pretending this is engineering. It’s not. It’s tech theater. And we’re all just audience members paying for front-row seats to the circus.

James Boggs

February 17, 2026 AT 10:51

Thank you for this clear and thoughtful breakdown. I especially appreciate the emphasis on testing behavioral drift with real-world data - too many teams rely on benchmarks and assume consistency. We implemented LiteLLM for email summarization last quarter and saw a 22% cost reduction with zero loss in accuracy. The key was starting small, monitoring closely, and documenting our routing rules. Simple, but effective. Highly recommend this approach for any team looking to reduce vendor lock-in without overcomplicating their stack.

Addison Smart

February 19, 2026 AT 04:20

What’s fascinating to me is how this mirrors the evolution of web standards in the early 2000s. Remember when every browser had its own CSS implementation? Developers were drowning in hacks and workarounds. Then came the W3C, then Chrome, then progressive enhancement. We didn’t eliminate differences - we learned to work with them.

LLMs are the same. They’re not bugs - they’re dialects. And just like we didn’t force every website to run on Internet Explorer, we shouldn’t force every AI task into one model’s mold. The real innovation isn’t in the adapter - it’s in the mindset shift: from ‘which model is best?’ to ‘which model is best for this job?’

I’ve worked with teams in Japan, Kenya, and Brazil who use this exact pattern. One group in Nairobi routes low-cost SMS summaries to a local 7B model because it understands Swahili idioms better than GPT-4. Another in Berlin uses parallel routing to meet GDPR latency requirements. This isn’t just technical - it’s cultural. And that’s why these patterns work: they honor context, not just code.

So yes, use LiteLLM. But don’t stop there. Ask: who are we serving? What language do they speak? What risks do they carry? The answer isn’t in the API - it’s in the human.

Barbara & Greg

February 19, 2026 AT 21:29

It is deeply troubling that the article casually dismisses the ethical implications of model swapping without a single mention of accountability, transparency, or the moral responsibility of developers to ensure consistent, non-harmful outputs. The notion that one can simply ‘route’ sensitive tasks - such as medical data processing or legal document analysis - through a series of black-box algorithms, and then pat oneself on the back for ‘reducing costs,’ is not only reckless, it is morally indefensible.

There is no such thing as ‘behavioral drift’ in a vacuum. What we are witnessing is the erosion of human judgment, replaced by probabilistic noise masquerading as intelligence. When a model fails to extract patient data correctly, it is not a ‘silent failure’ - it is a potential death sentence. And yet, the article frames this as a mere engineering challenge to be solved with ‘parallelization’ or ‘orchestrators.’

Interoperability without ethics is not innovation - it is negligence. We must demand that every model transition be accompanied by independent audit trails, human oversight protocols, and legally binding liability frameworks. Until then, any system that treats human lives as interchangeable inputs in a pipeline is not a tool - it is a weapon.

Michael Jones

February 20, 2026 AT 08:03

What if the real problem isn’t the models or the APIs but our obsession with control

We keep trying to make AI behave like a machine when it’s more like a person - inconsistent unpredictable sometimes brilliant sometimes broken

Maybe instead of building layers of abstraction we should be building trust

What if the answer isn’t to standardize outputs but to accept that different models think differently and that’s okay

One model sees a table and extracts data another sees it and tells a story

Maybe we don’t need to force them into the same box

Maybe we need to learn to listen to each voice

And stop treating intelligence like a commodity to be optimized

It’s not about which model is better

It’s about which one speaks to the moment

And if we’re lucky

They’ll surprise us

Buddy Faith

February 21, 2026 AT 12:18