When building custom language models, supervised fine-tuning (SFT) is the key step that transforms a general-purpose AI into a specialized tool. You don’t need to train a model from scratch to make it answer medical questions or handle customer support. SFT uses labeled examples to adapt existing large language models (LLMs) efficiently. Here’s how to do it right.

Supervised Fine-Tuning (SFT): A technique to adapt pre-trained large language models using labeled examples, enabling them to follow instructions and perform specific tasks without retraining from scratch.

Step 1: Choosing Your Base Model and Dataset

Start with a solid foundation. For most practitioners, Meta’s LLaMA-3 8B (4-bit quantized) is a great choice. It fits on a single consumer GPU with 12GB VRAM. Google’s text-bison@002 works well for cloud-based setups via Vertex AI. The dataset needs at least 500 high-quality examples, but 5,000+ is ideal for complex tasks. Medical question answering, for example, requires physician-verified Q&A pairs. Retail customer service needs real chat logs with accurate responses. Avoid generic web-scraped data; quality beats quantity every time. As one ML engineer on Reddit put it: "500 expert-labeled examples beat 10,000 messy ones for legal contract analysis."

Step 2: Preparing High-Quality Data
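Before you format anything, a quick quality pass over the raw examples can catch the empty and duplicated records that make scraped data risky. The sketch below is one possible way to do that in plain Python. The record keys `prompt` and `response`, the `Example` class, and the helper names are assumptions made for illustration; only the 500-example floor comes from the guidance above.

```python
from dataclasses import dataclass

MIN_EXAMPLES = 500  # lower bound quoted in this guide; 5,000+ for complex tasks


@dataclass(frozen=True)
class Example:
    prompt: str
    response: str


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical strings compare equal."""
    return " ".join(text.lower().split())


def clean_dataset(raw: list[dict]) -> list[Example]:
    """Drop records with an empty field and records that duplicate an earlier one."""
    seen: set[tuple[str, str]] = set()
    cleaned: list[Example] = []
    for record in raw:
        prompt = str(record.get("prompt") or "").strip()
        response = str(record.get("response") or "").strip()
        if not prompt or not response:
            continue  # incomplete pair: nothing to learn from
        key = (_normalize(prompt), _normalize(response))
        if key in seen:
            continue  # duplicate up to case and whitespace
        seen.add(key)
        cleaned.append(Example(prompt, response))
    return cleaned


def has_enough_examples(examples: list[Example], minimum: int = MIN_EXAMPLES) -> bool:
    return len(examples) >= minimum


# Hypothetical retail records, purely for demonstration.
raw = [
    {"prompt": "Where is my order?", "response": "You can track it under My Orders."},
    {"prompt": "where is  my order?", "response": "You can track it under My Orders."},
    {"prompt": "Can I return shoes?", "response": ""},
    {"prompt": "", "response": "Orphaned answer."},
]
examples = clean_dataset(raw)
print(len(examples), has_enough_examples(examples))
```

A filter like this only removes obvious problems. It does not replace expert review of the answers themselves.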

Data formatting is where most projects fail. Use consistent prompt templates. A YouTube tutorial by ML engineer Alvaro Cintas showed varying instruction formats reduced model accuracy by 18%. For medical Q&A, structure inputs like: "

Step 3: Setting Up Hyperparameters

Use Hugging Face’s Transformers library with TRL (Transformer Reinforcement Learning). For hyperparameters:

- Learning rate: 2e-5 to 5e-5 as the general range, staying at or below 3e-5 where possible to limit the risk of catastrophic forgetting

- Training epochs: 1-3 (more than 3 risks losing general knowledge)

- Batch size: 4-32 based on GPU memory

- Gradient accumulation steps: 4-8 for small batches
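To see how these settings interact, it can help to work out the effective batch size and the number of optimizer steps before launching a run. The sketch below is a plain-Python illustration, not TRL's API: the `SFTHyperparams` class and its method names are hypothetical, and the step count is an approximation that ignores framework-specific rounding. The warning thresholds simply restate the guidelines in the list above.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SFTHyperparams:
    learning_rate: float = 2e-5
    num_epochs: int = 3
    per_device_batch_size: int = 4
    gradient_accumulation_steps: int = 8
    num_devices: int = 1

    @property
    def effective_batch_size(self) -> int:
        """Examples contributing to each optimizer update."""
        return (
            self.per_device_batch_size
            * self.gradient_accumulation_steps
            * self.num_devices
        )

    def optimizer_steps(self, num_examples: int) -> int:
        """Approximate number of weight updates over the whole run."""
        steps_per_epoch = math.ceil(num_examples / self.effective_batch_size)
        return steps_per_epoch * self.num_epochs

    def guideline_warnings(self) -> list[str]:
        """Flag settings that fall outside the ranges suggested in this guide."""
        warnings = []
        if self.learning_rate > 3e-5:
            warnings.append("learning rate above 3e-5: higher risk of forgetting")
        if self.num_epochs > 3:
            warnings.append("more than 3 epochs: may lose general knowledge")
        if not 4 <= self.per_device_batch_size <= 32:
            warnings.append("batch size outside the 4-32 range")
        return warnings


hp = SFTHyperparams()
print(hp.effective_batch_size)      # 4 * 8 * 1 = 32
print(hp.optimizer_steps(5_000))    # ceil(5000 / 32) * 3 = 471
print(SFTHyperparams(learning_rate=5e-5, num_epochs=5).guideline_warnings())
```

In Transformers, the corresponding `TrainingArguments` fields are `learning_rate`, `num_train_epochs`, `per_device_train_batch_size`, and `gradient_accumulation_steps`.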

Step 4: Common Pitfalls and How to Avoid Them

Catastrophic forgetting happens when models lose general knowledge after fine-tuning. GitHub issue #12432 on TRL shows this occurs when learning rates exceed 3e-5. Fix it by reducing the rate and adding validation checks. Data quality issues are another big problem. JPMorgan Chase reported 28% hallucination rates in financial advice despite extensive SFT, and had to add extra guardrails. Always test with a small subset first. If the model generates nonsense on 10% of test examples, revisit your data.

Step 5: Evaluating Your Fine-Tuned Model

Don’t just rely on perplexity scores. Track task-specific accuracy (e.g., 89% on MedQA for medical Q&A models). Use human evaluation for coherence and safety. Anthropic’s Constitutional AI paper recommends scoring responses on helpfulness, honesty, and harmlessness. For real-world impact, measure time savings: Walmart Labs cut customer service response time by 63% after SFT on 12,000 retail examples. Always compare against the base model: if there is no improvement, rework your data or settings.
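The comparison against the base model can be sketched as a small harness that scores both models on a task set and on a general-knowledge set, so that a task gain and a possible forgetting regression show up side by side. Everything here is an illustrative assumption: models are stand-in callables from prompt to answer, scoring is exact match, and the 0.05 tolerance for a general-knowledge drop is an arbitrary placeholder.

```python
from typing import Callable

Model = Callable[[str], str]
Dataset = list[tuple[str, str]]  # (question, reference answer)


def accuracy(model: Model, dataset: Dataset) -> float:
    """Exact-match accuracy, ignoring case and surrounding whitespace."""
    if not dataset:
        raise ValueError("dataset is empty")
    hits = sum(
        model(question).strip().lower() == answer.strip().lower()
        for question, answer in dataset
    )
    return hits / len(dataset)


def compare(
    base: Model,
    tuned: Model,
    task_set: Dataset,
    general_set: Dataset,
    max_general_drop: float = 0.05,  # assumed tolerance, tune for your use case
) -> dict:
    task_gain = accuracy(tuned, task_set) - accuracy(base, task_set)
    general_drop = accuracy(base, general_set) - accuracy(tuned, general_set)
    return {
        "task_gain": task_gain,
        "general_drop": general_drop,
        "improved": task_gain > 0,
        "possible_forgetting": general_drop > max_general_drop,
    }


# Toy stand-ins for real models: lookup tables instead of generation.
BASE_ANSWERS = {"capital of france?": "Paris", "2 + 2?": "4"}
TUNED_ANSWERS = {
    "refund window?": "30 days",
    "ship to canada?": "Yes",
    "capital of france?": "Paris",
}


def base_model(question: str) -> str:
    return BASE_ANSWERS.get(question, "")


def tuned_model(question: str) -> str:
    return TUNED_ANSWERS.get(question, "")


task_set = [("refund window?", "30 days"), ("ship to canada?", "yes")]
general_set = [("capital of france?", "paris"), ("2 + 2?", "4")]
report = compare(base_model, tuned_model, task_set, general_set)
print(report)
```

Exact match is a crude metric. For free-form answers you would swap in the human or rubric-based scoring described above.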

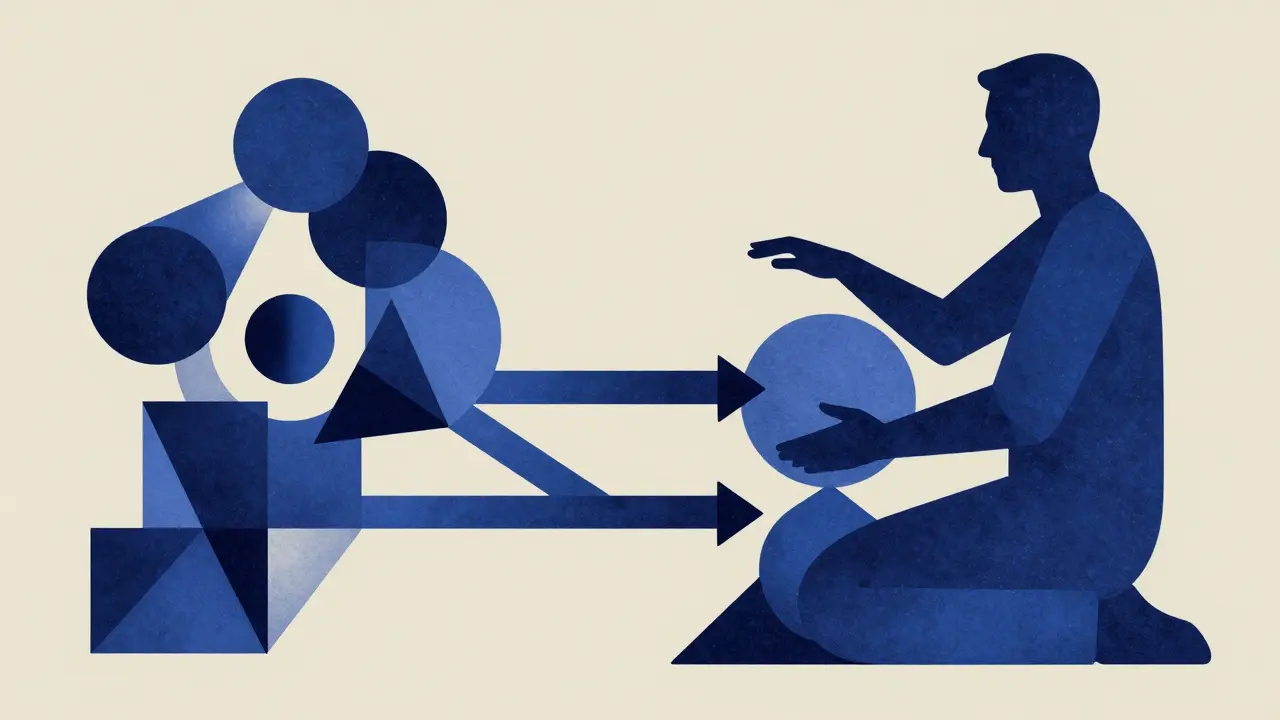

Step 6: Combining SFT with RLHF for Better Alignment

SFT alone can’t optimize for complex goals like "helpfulness" or "harmlessness." OpenAI’s InstructGPT achieved 85% human preference alignment using SFT followed by RLHF, versus 68% with SFT alone. RLHF uses human feedback to refine SFT outputs. Start with SFT, then collect preference data (e.g., "Response A is better than Response B") and train a reward model. This two-stage approach is industry standard for production-grade assistants.

| Method | Resource Requirements | Best Use Cases | Limitations |
|---|---|---|---|
| Supervised Fine-Tuning (SFT) | Low to moderate (0.1-1% of pre-training cost) | Instruction-following, domain-specific tasks | Dependent on high-quality data; can't optimize complex objectives |
| Full Parameter Fine-Tuning | Very high (requires full model retraining) | When maximum performance is critical | High memory usage; risk of catastrophic forgetting |
| Prompt Engineering | None (no training needed) | Quick prototyping, simple tasks | Limited to model's existing knowledge; no improvement on complex tasks |
| Reinforcement Learning from Human Feedback (RLHF) | High (requires SFT first) | Aligning with human preferences, multi-dimensional goals | Complex implementation; needs human feedback data |
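For the reward-model step mentioned above, preference pairs of the form "Response A is better than Response B" are commonly turned into a training signal with a pairwise logistic loss: the loss is small when the reward model scores the preferred response higher. The sketch below shows that formulation in plain Python. It is a common choice rather than something this guide prescribes, and the function names and scores are hypothetical.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Return -log(sigmoid(reward_chosen - reward_rejected)), computed stably."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); branch to avoid overflow in exp().
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_preference_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (chosen_score, rejected_score) pairs."""
    if not pairs:
        raise ValueError("no preference pairs given")
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)


# Hypothetical reward-model scores for three labeled comparisons.
pairs = [(2.0, 0.5), (0.1, 0.0), (-1.0, 1.0)]
for chosen, rejected in pairs:
    print(chosen, rejected, round(pairwise_preference_loss(chosen, rejected), 4))
print(round(mean_preference_loss(pairs), 4))
```

The third pair, where the rejected response gets the higher score, contributes the largest loss. That is the signal that pushes the reward model toward the human ranking.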

Real-World Successes and Failures

A medical startup fine-tuned LLaMA-2 7B on 2,400 physician-verified Q&A pairs. After 72 hours of training, it hit 89% accuracy on MedQA, up from 42% for the base model. Meanwhile, Walmart Labs reduced customer service response time by 63% using SFT on 12,000 retail examples. But JPMorgan Chase struggled: despite extensive SFT, their financial advice model had 28% hallucination rates. The fix? Adding explicit safety guardrails and human-in-the-loop reviews.

LoRA: A parameter-efficient fine-tuning method that modifies only 0.1-1% of model parameters using low-rank matrices.

Hugging Face Transformers: Open-source library for NLP tasks; SFT training is available through the SFTTrainer class in the companion TRL library.

Reinforcement Learning from Human Feedback (RLHF): A post-SFT technique that uses human feedback to optimize model behavior for complex objectives.

Current Trends and Challenges

Google’s April 2024 Vertex AI update automatically rejects low-quality data during training, cutting curation time by 65%. Hugging Face’s TRL v0.8 (June 2024) uses "dynamic difficulty adjustment" to sequence examples from simple to complex, boosting accuracy by 12-18%. But challenges remain: the EU AI Act requires "demonstrable oversight of all training data" for high-risk applications, and expert annotation costs may become a bottleneck beyond 2027. Still, 68% of enterprises now use SFT for customization, up from 22% in 2023.

What’s the minimum data size for supervised fine-tuning?

Google’s Vertex AI documentation recommends at least 500-1,000 high-quality examples, but Stanford CRFM found optimal results need 10,000-50,000 for complex tasks. Quality matters more than quantity: 500 expert-labeled examples often outperform 10,000 messy ones.

How does LoRA reduce memory usage during fine-tuning?

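LoRA freezes the base weights and trains small low-rank matrices that amount to only 0.1-1% of the model's parameter count. For a 7B model, this drops the memory tied to trainable weights from roughly 14GB to 0.5GB, making fine-tuning feasible on consumer GPUs once the frozen base model itself is loaded (typically in quantized form). Microsoft Research confirmed this approach achieves 95-98% of full fine-tuning performance.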
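The arithmetic behind those percentages is easy to check. A rank-r LoRA update to a weight matrix of shape d_out x d_in adds two small matrices with r * (d_out + d_in) parameters in total. The sketch below works that out for a single 4096 x 4096 projection with rank 8. Both values are illustrative assumptions, and the model-wide fraction depends on which layers receive adapters.

```python
def lora_added_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the update B @ A, with B of shape (d_out, rank) and A of shape (rank, d_in)."""
    return rank * (d_out + d_in)


def trainable_fraction(d_out: int, d_in: int, rank: int) -> float:
    """Adapter parameters relative to the full weight matrix they adapt."""
    return lora_added_params(d_out, d_in, rank) / (d_out * d_in)


def weights_gigabytes(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GB (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumed shapes: one square projection of width 4096, LoRA rank 8.
added = lora_added_params(4096, 4096, rank=8)
fraction = trainable_fraction(4096, 4096, rank=8)
print(added)               # 8 * (4096 + 4096) = 65536
print(f"{fraction:.2%}")   # 65536 / 16777216 = 0.39%

# Holding 7B weights: 16-bit (2 bytes each) versus 4-bit (0.5 bytes each).
print(weights_gigabytes(7e9, 2.0))   # 14.0
print(weights_gigabytes(7e9, 0.5))   # 3.5
```

The 14GB figure is simply what 7B parameters cost at 16-bit precision, which is why quantizing the frozen base model matters as much as shrinking the trainable part.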

Why is data formatting critical in SFT?

Inconsistent prompt templates cause model confusion. A YouTube tutorial by ML engineer Alvaro Cintas showed varying instruction formats reduced accuracy by 18%. Consistency is key: use the same structure for all inputs, like "
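One way to enforce that consistency is to route every example through a single formatting function and then verify the result before training. The template below is a hypothetical stand-in chosen for illustration, not a format recommended by any particular library, and the helper names are assumptions as well.

```python
import re

# Hypothetical template: every training example gets exactly this shape.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n{response}"

_TEMPLATE_PATTERN = re.compile(
    r"\A### Instruction:\n.+?\n\n### Response:\n.+\Z", re.DOTALL
)


def format_example(instruction: str, response: str) -> str:
    """Render one training example with the shared template."""
    instruction, response = instruction.strip(), response.strip()
    if not instruction or not response:
        raise ValueError("instruction and response must both be non-empty")
    return TEMPLATE.format(instruction=instruction, response=response)


def follows_template(text: str) -> bool:
    """Check that an already-formatted example matches the shared structure."""
    return _TEMPLATE_PATTERN.match(text) is not None


formatted = format_example("  What is the return window? ", "30 days.")
print(formatted)
print(follows_template(formatted))
print(follows_template("Q: What is the return window?\nA: 30 days."))
```

Running `follows_template` over the whole dataset before training is a cheap way to catch examples that were formatted by hand or imported from another source. Use the same function to build prompts at inference time.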

What’s the difference between SFT and RLHF?

SFT uses labeled examples to teach specific tasks, while RLHF builds on SFT by using human feedback to optimize for subjective qualities like helpfulness. OpenAI’s InstructGPT achieved 85% human preference alignment with both stages versus 68% with SFT alone.

How do you avoid catastrophic forgetting after SFT?

Use low learning rates (2e-5 to 5e-5, ideally no higher than 3e-5), limit training to 1-3 epochs, and validate with a separate test set. Learning rates above 3e-5 often cause the model to forget general knowledge, as seen in GitHub issue #12432 on TRL.

Is SFT enough for all use cases?

No. For tasks requiring nuanced judgment (like medical advice), SFT alone may not suffice. JPMorgan Chase reported 28% hallucination rates in financial advice despite SFT, requiring additional guardrails. Always combine SFT with RLHF for complex alignment goals.

Angelina Jefary

February 4, 2026 AT 22:34

SFT is a front for government surveillance. They're using medical data to track citizens.

Also, 'choice-it fits' should be 'choice. It fits' – punctuation matters. Grammar Nazi here.

Jennifer Kaiser

February 6, 2026 AT 21:55

It's interesting how SFT bridges the gap between general AI and specialized tasks.

But we should consider the ethical implications of using it for customer support – does it dehumanize interactions?

Also, data quality is crucial. 500 expert-labeled examples often outperform 10,000 messy ones.

This is something we all need to reflect on.

TIARA SUKMA UTAMA

February 7, 2026 AT 15:31

Data quality over quantity. Simple.

Jasmine Oey

February 7, 2026 AT 19:26

Oh my gosh, this guide is way too basic! RLHF is the real deal. SFT alone is useless for real-world apps.

You need 10k+ examples. But hey, it's a start! <3

Marissa Martin

February 9, 2026 AT 12:19

I don't know why people think SFT is important.

It's just a band-aid solution. Without ethics, it's just creating more problems.

But I guess most people don't care.