Tag: quantization

Feb 6, 2026

LLM Compression vs Model Switching: A Practical Guide for 2026

Learn when to compress large language models versus switching to smaller ones for optimal performance and cost. Discover real-world examples, benchmarks, and expert tips for deploying efficient AI systems in 2026.

Jan 31, 2026

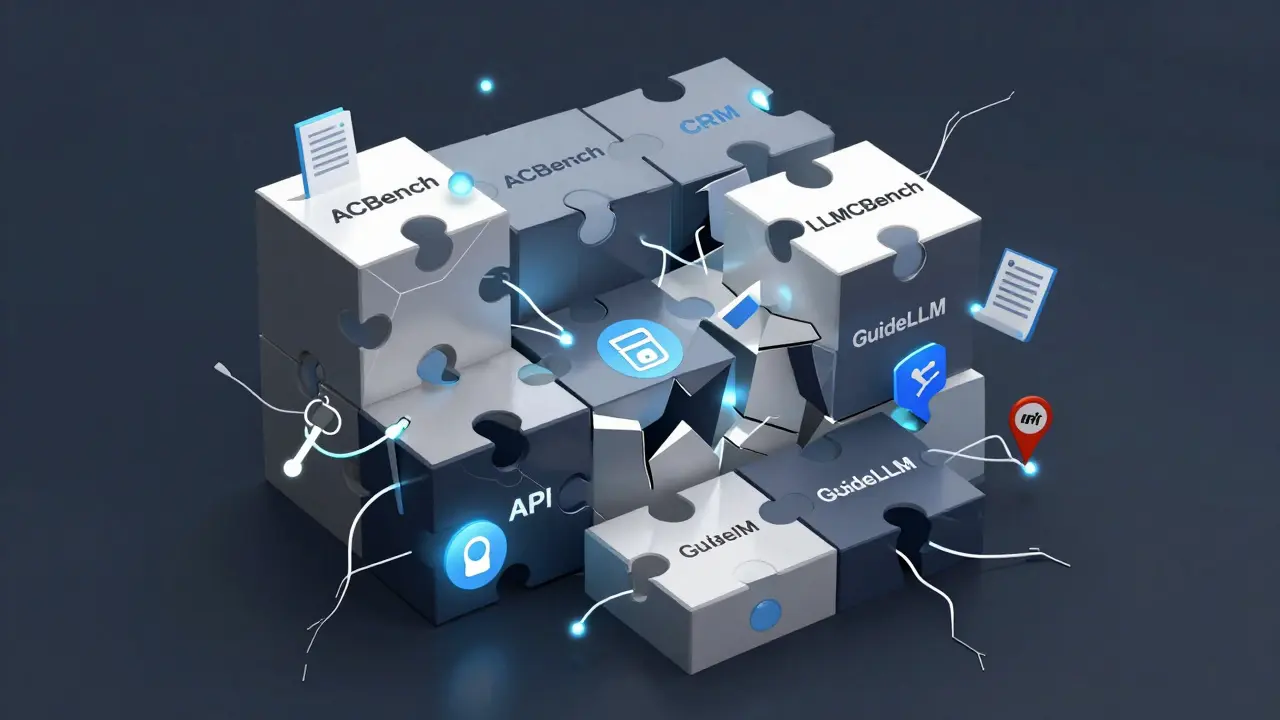

Benchmarking Compressed LLMs on Real-World Tasks: A Practical Guide

Learn how to properly benchmark compressed LLMs using ACBench, LLMCBench, and GuideLLM to avoid deployment failures. Real-world performance matters more than size or speed.