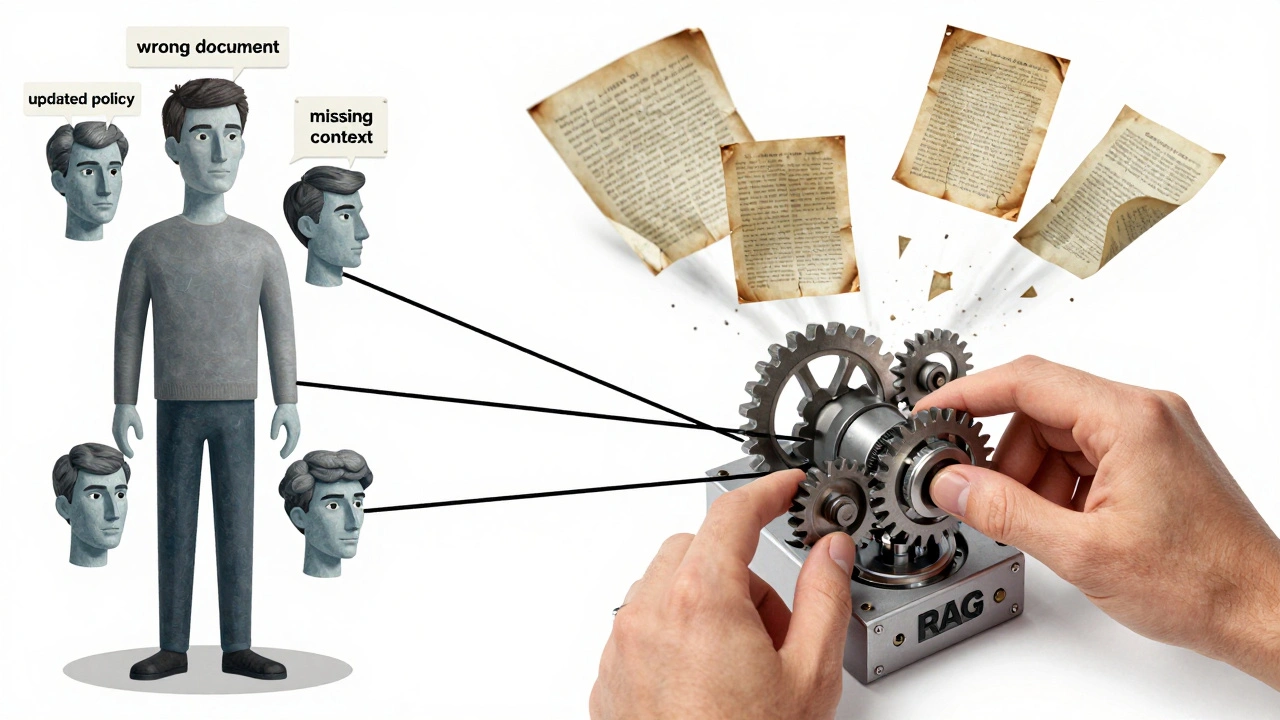

Most RAG systems start strong but fade over time. You build them on clean data, test them on perfect benchmarks, and they deliver great answers. Then real users start asking questions that are messy, unexpected, and constantly evolving, and suddenly the system gives half-right answers, pulls outdated docs, or misses context entirely. The problem isn’t the model. It’s that RAG was built like a library bookshelf: static, unchanging, and blind to what users actually need. Human feedback loops fix that. They turn RAG from a one-time setup into a living system that learns from every interaction.

Why RAG Breaks in the Real World

RAG systems retrieve documents based on semantic similarity. If a user asks, "What’s the refund policy for defective headphones bought last month?", the system scans its knowledge base for anything about refunds or headphones. But semantic search doesn’t understand nuance. It doesn’t know that "defective" matters more than "broken," or that "last month" means you need the policy updated in June, not January. Without feedback, it keeps retrieving the same old pages, even if they’re wrong. A 2024 analysis from Label Studio found that 67% of RAG failures happen because the system pulls irrelevant or outdated context. That’s not a model issue. That’s a retrieval issue. And the only way to fix retrieval is to let humans tell it what’s wrong and what would have been better.

How Human Feedback Loops Actually Work

Human feedback loops in RAG aren’t just about clicking "this answer was helpful." They’re structured, repeatable systems that capture why something failed and how to improve it. The Pistis-RAG framework, developed by Crossing Minds, shows how it’s done. Here’s the process:

- Query happens: A user asks a question. The RAG system retrieves documents and generates an answer.

- Feedback is collected: A human reviewer (maybe a support agent, domain expert, or trained annotator) sees the query, the retrieved documents, and the answer. They mark: Was the answer correct? Were the right documents pulled? What was missing?

- Feedback is turned into signals: Instead of just saying "wrong," the reviewer answers: "The policy document from 2023 was retrieved, but the 2025 update was ignored. The answer should have mentioned the 30-day window for defective items."

- The system learns: These labeled examples train a ranking model to prioritize documents that match real-world needs, not just keyword overlap. Over time, the system starts pulling the right docs even without direct feedback.
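The four steps above can be sketched as a small data structure plus a conversion into ranking examples. This is a minimal illustration, not the Pistis-RAG implementation: `FeedbackRecord`, `to_ranking_pairs`, every field name, the document IDs, and the sample answer are assumptions made up for the example. The idea is that each reviewed interaction yields "this document should outrank that one" pairs, which is the kind of signal a ranking model can train on.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackRecord:
    """One reviewed interaction (all field names are illustrative)."""
    query: str
    answer: str
    retrieved_ids: list[str]                               # documents pulled, in rank order
    answer_correct: bool
    relevant_ids: set[str] = field(default_factory=set)    # retrieved docs the reviewer approved
    missing_ids: set[str] = field(default_factory=set)     # docs that should have been pulled
    note: str = ""                                         # the reviewer's reason, in free text


def to_ranking_pairs(record: FeedbackRecord) -> list[tuple[str, str, str]]:
    """Turn one record into (query, preferred_doc, rejected_doc) triples."""
    preferred = sorted(record.relevant_ids | record.missing_ids)
    rejected = [d for d in record.retrieved_ids if d not in record.relevant_ids]
    return [(record.query, good, bad) for good in preferred for bad in rejected]


# Made-up example mirroring the refund scenario described in the text.
record = FeedbackRecord(
    query="refund policy for defective headphones bought last month",
    answer="Refunds are accepted within 14 days.",
    retrieved_ids=["policy-2023", "headphones-faq"],
    answer_correct=False,
    relevant_ids={"headphones-faq"},
    missing_ids={"policy-2025"},
    note="2023 policy retrieved, 2025 update ignored; 30-day window not mentioned.",
)

pairs = to_ranking_pairs(record)
for query, good, bad in pairs:
    print(f"{good} should outrank {bad}")
```

Keeping the reviewer's free-text note next to the structured fields matters: the pairs feed the ranker, while the note tells you later why a pair exists.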

What Makes Human Feedback Better Than Automation

You might think: Why not just use automated metrics like Ragas or DeepEval? They score relevance, coherence, factual accuracy. But they’re blind to what users actually care about. A system might score 0.92 on Ragas because the answer is grammatically perfect and cites three sources. But if all three sources are from 2021 and the user needs 2025 rules, it’s still wrong. Humans spot that. Automated tools can’t. A 2024 study showed human feedback loops reduce false positives (cases where the system thinks it’s wrong but isn’t) by 42% compared to pure metric-based systems. That means fewer unnecessary retraining cycles and less noise in the data. Even RLHF, the method used to align large language models, takes longer. Crossing Minds’ internal tests showed RAG feedback loops reach optimal performance 18.3% faster than RLHF because they target retrieval, not generation. Fix the context, and the answer fixes itself.

Real Results: Where This Actually Matters

This isn’t theory. Companies are seeing real impact:

- A Fortune 500 financial firm used Label Studio’s feedback system and cut customer complaints about incorrect policy answers by 23.7% in six months.

- A healthcare provider implemented structured reviews where medical staff rated RAG responses. Each review took 47 seconds on average. Result? A 31.4% drop in clinically inaccurate answers.

- An e-commerce platform saw its return reason classification accuracy jump from 68% to 89% after adding feedback loops to handle vague queries like "I didn’t get what I expected."

Where It Falls Short

Human feedback loops aren’t magic. They come with trade-offs.

- Complexity: Setting up the feedback pipeline takes 35% more engineering effort than a basic RAG system, according to Braintrust’s 2025 survey.

- Feedback fatigue: If reviewers are asked to label 50 answers a day, quality drops. Google Cloud recommends "opinionated tiger teams": small groups of 3-5 people who match real user profiles (e.g., a tech-savvy customer rep + a non-technical manager).

- Bias risk: If feedback only comes from one group (say, younger users or English speakers), the system learns their biases. Stanford’s Dr. Emily Zhang warns that unmitigated feedback can amplify demographic bias by up to 22%.

- Regulatory hurdles: In healthcare and finance, every feedback input must be logged, auditable, and compliant. The EU AI Act requires human oversight for high-risk AI systems, which can make feedback loops not just useful but mandatory for RAG deployments that fall into that category.

Getting Started: What You Need

You don’t need a PhD to start. Here’s what works:

- Pick your feedback tool: Label Studio, Confident AI, and Pistis-RAG are the most mature. Label Studio’s open-source platform lets you build workflows without code.

- Define the review package: For each feedback entry, reviewers must see: the original query, the model’s answer, the top 3 retrieved documents, and the automated confidence score.

- Start small: Pick one high-impact use case, such as customer support FAQs or product documentation. Don’t try to fix everything at once.

- Set up a feedback cadence: Review 10-20 responses daily. Use automated tagging to flag low-confidence answers for human review.

- Track progress: Monitor metrics like retrieval precision (should hit 0.85+), answer accuracy, and user satisfaction scores over time.
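The review package and daily cadence from the list above could be wired together roughly like this. It’s a sketch under stated assumptions, not any tool’s API: `ReviewPackage`, `build_review_queue`, the 0.6 confidence threshold, and the sample queries and scores are all hypothetical. The only parts taken from the text are the four things a reviewer sees and the cap of about 20 reviews a day.

```python
from dataclasses import dataclass


@dataclass
class ReviewPackage:
    """What a reviewer sees for one response (hypothetical structure)."""
    query: str
    answer: str
    top_documents: list[str]   # the top 3 retrieved documents
    confidence: float          # automated confidence score, assumed to be in [0, 1]


def build_review_queue(packages, threshold=0.6, daily_limit=20):
    """Return the lowest-confidence packages first, capped at the daily budget.

    The 0.6 threshold is an assumed starting point, not a recommended value.
    """
    flagged = [p for p in packages if p.confidence < threshold]
    flagged.sort(key=lambda p: p.confidence)
    return flagged[:daily_limit]


# Made-up sample data for illustration.
packages = [
    ReviewPackage("How do I reset my password?", "Use the reset link.",
                  ["doc-a", "doc-b", "doc-c"], 0.93),
    ReviewPackage("Can I return opened headphones?", "Yes, within 14 days.",
                  ["doc-d", "doc-e", "doc-f"], 0.41),
    ReviewPackage("I didn't get what I expected", "Please contact support.",
                  ["doc-g", "doc-h", "doc-i"], 0.55),
]

queue = build_review_queue(packages)
for p in queue:
    print(f"{p.confidence:.2f}  {p.query}")
```

Sorting by confidence before applying the cap means that on a busy day reviewers spend their limited budget on the answers the system is least sure about.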

The Future: Where This Is Headed

By 2027, Gartner predicts 75% of enterprise RAG systems will use human feedback loops. That’s up from 28% in late 2025. New developments are making it easier:

- Google Cloud’s December 2025 update to Vertex AI cuts feedback latency to under 150ms, fast enough for real-time adjustment.

- Label Studio’s November 2025 release auto-categorizes feedback, cutting review time by 38%.

- Pistis-RAG 2.0 (Q2 2026) will support multimodal feedback: users can highlight text in documents or annotate images alongside text.

- Open-source RAGBench is building standardized evaluation protocols, so feedback quality can be measured consistently across tools.

Is This Right for You?

Ask yourself:

- Do your users ask questions that change over time? (Yes → you need feedback.)

- Are your support teams spending hours correcting RAG answers? (Yes → feedback saves labor.)

- Is your data updated monthly or quarterly? (Yes → feedback bridges the gap.)

- Do you operate in finance, healthcare, or legal? (Yes → documented human oversight may be required by regulation, and feedback loops are one way to provide it.)

How often should human feedback be collected in a RAG system?

Start with daily reviews of 10-20 low-confidence responses. Once the system stabilizes, shift to weekly reviews of edge cases. The goal isn’t to review everything. It’s to catch patterns. If the same type of query keeps failing, that’s your next training batch.
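Catching patterns can be as simple as counting failures per query type. The sketch below assumes each review has already been tagged with a `query_type` label and an `answer_correct` flag; both field names, the `recurring_failures` helper, and the cutoff of three failures are assumptions for illustration.

```python
from collections import Counter


def recurring_failures(reviews, min_count=3):
    """Count failed reviews per query type and keep the types that recur."""
    counts = Counter(r["query_type"] for r in reviews if not r["answer_correct"])
    return [(qtype, n) for qtype, n in counts.most_common() if n >= min_count]


# Made-up review log for illustration.
reviews = [
    {"query_type": "refund", "answer_correct": False},
    {"query_type": "refund", "answer_correct": False},
    {"query_type": "shipping", "answer_correct": True},
    {"query_type": "refund", "answer_correct": False},
    {"query_type": "warranty", "answer_correct": False},
]

next_batch = recurring_failures(reviews)
print(next_batch)
```

Here only the refund queries cross the cutoff, so they would form the next training batch, while the single warranty failure waits until it proves to be a pattern.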

Can I use customer feedback instead of expert reviewers?

You can, but with caution. Customer feedback is noisy. A user might say "wrong" because they didn’t understand the answer, not because the system failed. Use customer feedback to flag issues, but validate with domain experts. The best approach combines both: let users report problems, then have experts label why they happened.

Do I need to retrain the whole model every time I get feedback?

No. Feedback loops only retrain the retrieval ranking model-not the LLM. This is a key advantage. You’re fine-tuning what documents to pull, not rewriting how the model generates text. That means faster updates, lower compute costs, and no need for massive retraining cycles.
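To make the point concrete, here is a deliberately simple stand-in for a trained ranking model: a reranker that nudges similarity scores using accumulated reviewer votes. It is not how Pistis-RAG or any named tool works. `FeedbackReranker`, the 0.2 weight, the smoothing formula, and the document IDs are all assumptions. What it shows is that the update touches only the ordering of retrieved documents, never the LLM.

```python
from collections import defaultdict


class FeedbackReranker:
    """Adjusts retrieval scores with reviewer votes (illustrative heuristic)."""

    def __init__(self, weight=0.2):
        self.weight = weight                        # how much feedback can shift a score
        self.votes = defaultdict(lambda: [0, 0])    # doc_id -> [helpful, unhelpful]

    def record(self, doc_id, helpful):
        """Store one reviewer judgment about a retrieved document."""
        self.votes[doc_id][0 if helpful else 1] += 1

    def adjustment(self, doc_id):
        """Smoothed vote balance in (-1, 1); 0.0 when there is no feedback."""
        helpful, unhelpful = self.votes.get(doc_id, (0, 0))
        return (helpful - unhelpful) / (helpful + unhelpful + 2)

    def rerank(self, candidates):
        """Reorder (doc_id, similarity) pairs by similarity plus feedback."""
        return sorted(
            candidates,
            key=lambda c: c[1] + self.weight * self.adjustment(c[0]),
            reverse=True,
        )


# Made-up candidates: the outdated policy is slightly more "similar".
candidates = [("policy-2023", 0.82), ("policy-2025", 0.80)]

reranker = FeedbackReranker()
before = reranker.rerank(candidates)

for _ in range(3):
    reranker.record("policy-2023", helpful=False)
    reranker.record("policy-2025", helpful=True)
after = reranker.rerank(candidates)

print("before:", [doc_id for doc_id, _ in before])
print("after: ", [doc_id for doc_id, _ in after])
```

A production system would more likely train a learned ranker on labeled examples, but the cost profile is the same: a small model or score table is updated while the generator stays frozen.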

What’s the minimum team size needed to run a feedback loop?

You can start with one dedicated reviewer and one engineer. But for sustainability, aim for a team of 3-5 reviewers who rotate weekly. This prevents burnout and brings different perspectives. At larger companies, feedback reviewers are often customer support leads or product specialists, not data scientists.

How do I know if my feedback loop is working?

Track three metrics: retrieval precision (should rise above 0.85), answer accuracy (measured by expert audits), and user satisfaction (via post-answer surveys). If retrieval precision increases by 5-10% over 60 days and user complaints drop, you’re on track. If not, check your feedback quality: were reviewers giving specific reasons, or just "good/bad"?
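Retrieval precision is the easiest of the three to compute from review data. The sketch below assumes precision is measured over the top 3 documents (precision@3) and that each review provides the retrieved IDs plus the set the reviewer marked relevant. The function names and the sample data are made up, and the text doesn’t say whether the 5-10% target is relative or absolute, so the example reports the relative change as one possible reading.

```python
def precision_at_k(retrieved_ids, relevant_ids, k=3):
    """Fraction of the top-k retrieved documents that reviewers marked relevant."""
    top_k = retrieved_ids[:k]
    if not top_k:
        return 0.0
    return sum(1 for doc_id in top_k if doc_id in relevant_ids) / len(top_k)


def mean_precision(reviews, k=3):
    """Average precision@k over (retrieved_ids, relevant_ids) pairs."""
    scores = [precision_at_k(retrieved, relevant, k) for retrieved, relevant in reviews]
    return sum(scores) / len(scores) if scores else 0.0


# Made-up review batches: one from the start of the period, one from the end.
baseline = [(["a", "b", "c"], {"a"}), (["d", "e", "f"], {"d", "e"})]
current = [(["a", "b", "c"], {"a", "b"}), (["d", "e", "f"], {"d", "e", "f"})]

before = mean_precision(baseline)
after = mean_precision(current)
relative_change = (after - before) / before

print(f"precision@3: {before:.2f} -> {after:.2f} ({relative_change:+.0%} relative)")
```

Compute this on a fixed audit set each period, so that a change in the number reflects the retriever and not a change in which queries happened to be reviewed.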

Are there free tools to build a human feedback loop for RAG?

Yes. Label Studio is open-source and supports custom RAG feedback workflows. You’ll need to set up your own vector database (like Chroma or Qdrant) and connect it to your LLM. It’s not plug-and-play, but it’s free. For teams with limited engineering resources, Confident AI and Braintrust offer trial versions with guided setup.

Can human feedback loops work with multilingual RAG systems?

Absolutely. The Pistis-RAG framework was tested on both English (MMLU) and Chinese (C-EVAL) datasets, showing gains in both. The key is having reviewers who are fluent in each language. Don’t translate feedback. Collect it natively. A Chinese reviewer should label Chinese queries. This preserves cultural and linguistic nuance that translation loses.