Ever wonder why your AI assistant sometimes makes up facts, even when it sounds convincing? That’s not a bug; it’s a feature of how these models generate text. The real culprit behind many hallucinations isn’t the training data alone. It’s what happens during generation: the sampling choices that decide which words come next. And if you’re using an LLM in production, tweaking these settings might be the single most effective way to cut hallucinations without retraining your model.

What Exactly Is a Hallucination?

A hallucination in an LLM isn’t a glitch like a crashed app. It’s when the model confidently generates something that’s false, unsupported by context, or outright nonsense. Think of it like a student who didn’t study but guesses answers anyway. The model isn’t lying; it’s just bad at saying "I don’t know." According to research from OpenAI and others, training encourages models to guess rather than admit uncertainty. That’s baked into the loss function. So when you ask a question with ambiguous context, the model doesn’t pause. It picks a word. Then another. And another. Each choice builds on the last. And if the sampling method is too permissive? You get a convincing lie.

How Sampling Works: The Hidden Levers

Text generation isn’t random. It’s a probability game. At each step, the model calculates a score (logit) for every possible next word. Then it turns those scores into probabilities using softmax. That’s where sampling comes in. It decides how to pick from those probabilities. There are four main methods:

- Greedy decoding: Always picks the word with the highest probability. Predictable. Safe. Boring.

- Temperature sampling: Flattens or sharpens the probability curve. Low temperature = more confident picks. High temperature = wilder guesses.

- Top-k sampling: Only considers the top k most likely words. Cut off the long tail.

- Nucleus sampling (top-p): Picks from the smallest set of words whose combined probability adds up to p. Adaptive. Smarter than top-k.
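As a rough illustration of the pipeline described above, here is a minimal sketch in plain Python: logits go through a temperature-scaled softmax, and the next word is chosen either greedily or by sampling. The toy vocabulary, the logit values, and the function names are assumptions made up for this example, not taken from any particular model or library.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature divides the logits first."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0; use greedy_pick for the T=0 case")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(logits):
    """Greedy decoding: index of the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])

def sample_pick(logits, temperature=1.0, rng=random):
    """Temperature sampling: draw one index from the rescaled distribution."""
    probs = softmax(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

# Hypothetical toy vocabulary and logits, for illustration only.
vocab = ["Paris", "Lyon", "London", "banana"]
logits = [4.0, 2.0, 1.0, -1.0]

for t in (0.3, 1.0, 2.0):
    # Lower temperature concentrates probability on the top word.
    print(t, [round(p, 3) for p in softmax(logits, t)])

print(vocab[greedy_pick(logits)])  # prints "Paris"
```

Running it shows the share of probability on the top word shrinking as temperature rises, which is the "flattens or sharpens" behavior in the list above.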

Temperature: The First Dial You Should Adjust

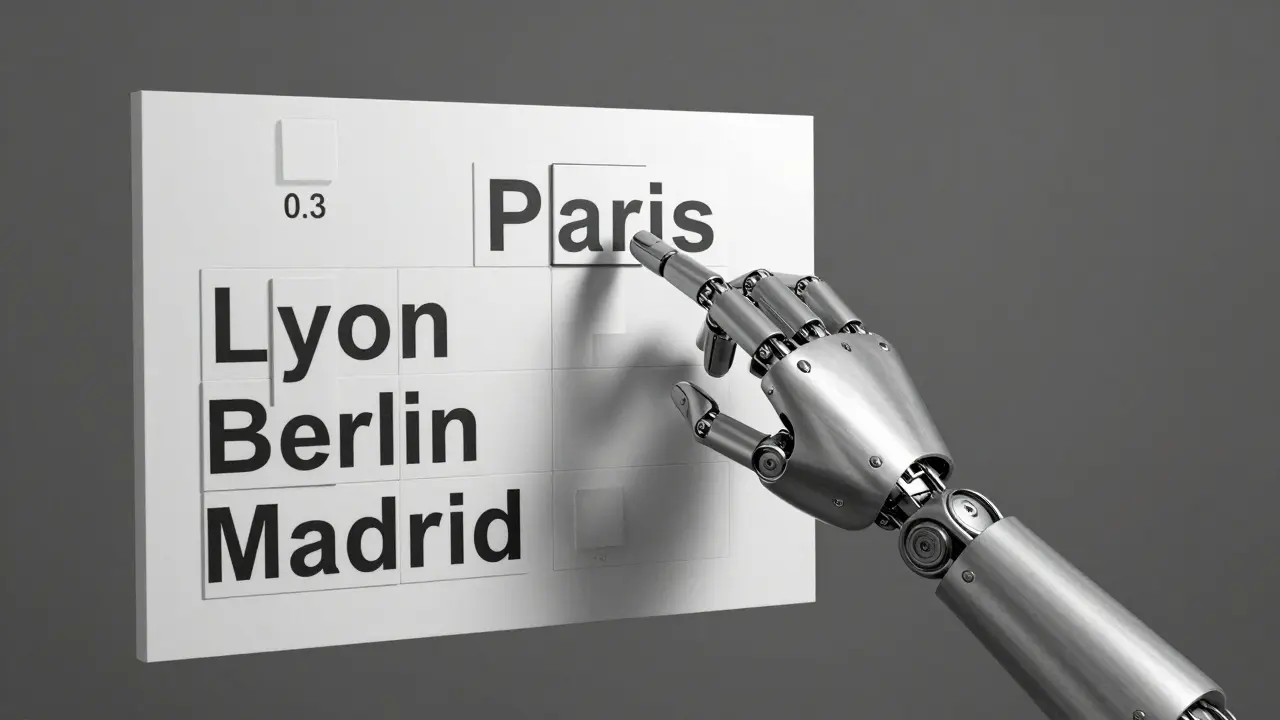

Temperature is the easiest setting to tweak. It scales the logits before softmax. As it approaches 0, sampling collapses into greedy decoding. At 1.0, the model’s probabilities are used unchanged; push it well above 1.0 and the distribution flattens until every word starts to look equally likely. Most models default to 0.7: fine for chatbots, dangerous for fact-heavy tasks.

Datadog’s 2024 study tested 14,900 prompts using HaluBench. When they dropped temperature from 0.7 to 0.3, hallucinations fell by 37%. Why? Lower temperature means the model rarely picks low-probability options. It doesn’t chase unlikely connections. It sticks to what’s most likely based on the input. Professor Andrew Ng’s 2025 course update recommends 0.2-0.5 for any task requiring factual accuracy. That’s not a suggestion; it’s a baseline. If you’re building a medical summary tool or a legal document analyzer, start here.

But there’s a trade-off. Too low, and responses become robotic. Reddit user u/NLP_Newbie tried lowering temperature from 0.7 to 0.3 on a financial advice bot. Hallucinations dropped. But user satisfaction? Plummeted from 4.2 to 3.1 out of 5. People found the replies too stiff. Too safe.

Top-k and Top-p: Cutting the Noise

Top-k limits the pool of candidate words to the k most probable ones. If k=100, you’re still letting in a lot of noise. If k=40? You cut out the weakest contenders. Raga AI found that reducing top-k from 100 to 40 cut factual errors by 28%. But here’s the catch: top-k doesn’t adapt. In a medical context, the top 50 words might include a bunch of irrelevant terms. In a creative writing prompt? You might need more flexibility.

That’s where nucleus sampling (top-p) shines. Instead of fixing the number of words, you fix the probability sum. Say p=0.9. The model adds words to the pool until their combined probability hits 90%. So if the top 10 words cover 92%, it only uses those. If the top 200 words are needed to hit 90%? It uses all 200. Datadog’s February 2025 tests showed nucleus sampling at p=0.92 delivered 94.3% factual accuracy, better than top-k at k=50 (92.1%). Why? Because it’s context-aware. It doesn’t force a fixed number. It follows the data.
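To make the difference concrete, here is a small sketch of both filters applied to two made-up probability distributions, one sharply peaked and one nearly flat. The function names and the example numbers are assumptions for illustration; real inference libraries apply the same idea to logits over the full vocabulary.

```python
def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize what is left."""
    keep = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}

def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}

# Hypothetical next-word distributions, for illustration only.
peaked = [0.85, 0.08, 0.04, 0.02, 0.01]  # the model is fairly sure
flat = [0.25, 0.22, 0.20, 0.18, 0.15]    # the model is unsure

# Top-k keeps the same number of candidates regardless of confidence.
print(len(top_k_filter(peaked, 3)), len(top_k_filter(flat, 3)))      # prints "3 3"
# Top-p keeps few candidates when the model is sure, many when it is not.
print(len(top_p_filter(peaked, 0.9)), len(top_p_filter(flat, 0.9)))  # prints "2 5"
```

The pool size under top-p tracks how concentrated the distribution is, which is the adaptive behavior the text describes.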

Real-World Benchmarks: What Actually Works?

Let’s cut through the theory. Here’s what the data says about real performance across common settings:

| Method | Parameters | Factual Accuracy | Use Case Fit |
|---|---|---|---|
| Greedy Decoding | Temperature=0 | 98.7% | Low creativity, high risk of repetition |
| Temperature Sampling | 0.5 | 89.4% | General chat, moderate creativity |
| Top-k Sampling | k=50 | 92.1% | Good for structured tasks |
| Nucleus Sampling | p=0.92 | 94.3% | Best overall balance |
| Consortium Voting | Multiple models, p=0.92 | 96.5% | High-stakes domains only |

Domain Matters: One Size Doesn’t Fit All

You can’t use the same settings for a legal contract analyzer and a poetry generator. The data shows:

- Medical & legal: Temperature 0.15-0.25, top-p 0.85-0.90. Even small hallucinations can be dangerous.

- Customer service: Temperature 0.3-0.4, top-p 0.90-0.93. Need clarity, but also some warmth.

- Creative writing: Temperature 0.7-0.9, top-p 0.95-0.98. You want surprise. You accept risk.
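One way to keep these ranges from drifting across a codebase is to store them as data. The sketch below encodes the three ranges listed above; the `PRESETS` name, the domain keys, and the choice to start testing at the midpoint of each range are assumptions for this example, not a recommendation from the sources cited here.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SamplingRange:
    temperature: tuple  # (low, high)
    top_p: tuple        # (low, high)

# Ranges copied from the list above; the keys are hypothetical labels.
PRESETS = {
    "medical_legal": SamplingRange((0.15, 0.25), (0.85, 0.90)),
    "customer_service": SamplingRange((0.3, 0.4), (0.90, 0.93)),
    "creative_writing": SamplingRange((0.7, 0.9), (0.95, 0.98)),
}

def _midpoint(low_high):
    """Middle of a (low, high) range, rounded to keep the value readable."""
    return round(sum(low_high) / 2, 3)

def starting_point(domain):
    """Return a first setting to test: the midpoint of each range (an assumption)."""
    preset = PRESETS[domain]
    return {
        "temperature": _midpoint(preset.temperature),
        "top_p": _midpoint(preset.top_p),
    }

print(starting_point("medical_legal"))
print(starting_point("creative_writing"))
```

Treat the returned values as a place to begin measuring, then narrow within the range using your own prompts.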

What’s Next? Automation Is Coming

Right now, tuning sampling parameters is manual. It takes weeks. You test, measure, tweak, repeat. But that’s changing. Google’s Gemma 3 (January 2025) introduced adaptive sampling. It detects if you’re asking for a fact or a story and adjusts on the fly. OpenAI’s API now has "hallucination guardrails" that auto-lower temperature for RAG tasks. And Meta’s Llama 4 (planned Q2 2025) will monitor token-level confidence during generation and adjust sampling mid-response. Gartner predicts that by 2027, 90% of enterprise LLMs will use automated parameter tuning. The future isn’t engineers tweaking sliders. It’s models that know when to be cautious.

But Don’t Overcorrect

Dr. Emily Bender from the University of Washington warns: "Over-optimizing for accuracy can create new problems." A model that’s too rigid might give you a correct answer, but one that’s useless. "The capital of France?" "Paris." That’s accurate. But if the user asked for a travel guide? That’s not helpful. Hallucinations aren’t the only failure mode. Unhelpful, sterile, robotic outputs are just as bad. The goal isn’t zero hallucinations. It’s contextually appropriate responses.

How to Start Optimizing Today

You don’t need a PhD. Here’s your starter plan:

- Define your task: Is it factual? Creative? Legal? Medical?

- Start with Hugging Face’s baseline: Temperature=0.3, top-p=0.9, top-k=50.

- Test with real prompts: Use a small set of 50-100 real user queries. Measure hallucinations with a simple rule: "Does the answer match verified sources?"

- Adjust one parameter at a time: Try lowering temperature first. Then tweak top-p. Don’t change both at once.

- Check for stiffness: If users say responses feel "robotic" or "too short," raise temperature slightly.
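The test-and-adjust steps above can be sketched as a small harness that varies one parameter while holding the others at the baseline. Everything model-specific here is a placeholder: `fake_generate` and `fake_is_supported` are hypothetical stubs that exist only so the example runs. In practice you would swap in a call to your own model and a check against verified sources.

```python
def hallucination_rate(generate, is_supported, prompts, **params):
    """Fraction of prompts whose answer fails the verification check."""
    failures = sum(
        1 for prompt in prompts if not is_supported(prompt, generate(prompt, **params))
    )
    return failures / len(prompts)

def sweep_one(generate, is_supported, prompts, baseline, name, values):
    """Vary a single parameter; every other setting stays at the baseline."""
    results = {}
    for value in values:
        params = dict(baseline, **{name: value})
        results[value] = hallucination_rate(generate, is_supported, prompts, **params)
    return results

# --- Hypothetical stubs so the sketch runs; replace with real calls. ---
def fake_generate(prompt, temperature, top_p, top_k):
    # Toy behavior: each prompt "breaks" once temperature passes its own threshold.
    index = int(prompt.split()[-1])
    threshold = 0.05 + 0.1 * index
    return "wrong" if temperature > threshold else "right"

def fake_is_supported(prompt, answer):
    # Stand-in for "does the answer match verified sources?"
    return answer == "right"

prompts = [f"question {i}" for i in range(10)]  # use 50-100 real queries in practice
baseline = {"temperature": 0.3, "top_p": 0.9, "top_k": 50}

results = sweep_one(fake_generate, fake_is_supported, prompts,
                    baseline, "temperature", [0.3, 0.5, 0.7])
for value, rate in results.items():
    print(f"temperature={value}: hallucination rate {rate:.0%}")
```

Because only one key in `baseline` changes per sweep, any shift in the measured rate can be attributed to that parameter.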

Why This Matters More Than You Think

Gartner reports that 68% of companies now have official sampling guidelines. Fortune 500s are embedding them into AI governance. Why? Because hallucinations cost money. A financial chatbot giving wrong stock advice. A customer service bot inventing return policies. A medical summary misstating a drug interaction. In 2024, the global market for LLM optimization tools hit $2.4 billion. Sampling parameter management is now a core part of that. It’s not a niche tweak. It’s a production necessity. And here’s the truth: no amount of prompt engineering or RAG will fix bad sampling. If your generation parameters are loose, you’re building on sand. Fix the foundation first.

What’s the best sampling setting to reduce hallucinations?

For most factual tasks, nucleus sampling (top-p) at p=0.92 with temperature=0.3 delivers the best balance of accuracy and fluency. In the benchmarks above it outperforms top-k, and it avoids the repetition that greedy decoding is prone to. Start here, then adjust based on your domain.

Does lowering temperature make responses too robotic?

Yes, if you go too low. Temperature below 0.2 can make outputs stiff and repetitive. The trick is finding the lowest temperature that still gives you accurate answers without killing natural flow. Use a two-stage approach: generate facts at low temperature, then refine tone with a slight bump (e.g., 0.3 → 0.4).

Is top-k better than top-p for reducing hallucinations?

Top-p (nucleus sampling) is generally better. Top-k uses a fixed number of words, which can include irrelevant options in some contexts. Top-p adapts: it only picks from the smallest set of words whose probabilities sum to your threshold. This makes it more context-sensitive and accurate, especially in complex domains.

Can I fix hallucinations just with better prompts?

No. Prompts help, but they don’t control the generation process. If your sampling parameters allow high randomness, even the best prompt can lead to hallucinations. Sampling settings are the final gatekeeper. You need both: good prompts and tight sampling.

Should I use consortium voting to eliminate hallucinations?

Only if you’re in high-stakes domains like healthcare or law. Consortium voting, which averages outputs from multiple model runs, can reduce hallucinations by 18-22 percentage points. But it triples compute cost. For most applications, it’s overkill. Use it only when accuracy must exceed 99%.
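Text answers cannot be averaged directly, so one simple stand-in is a majority vote over several runs. The sketch below is an assumption about how such voting might look, not the method used in the benchmarks cited in this article. The `consortium_vote` name and the `min_agreement` threshold are made up for illustration.

```python
from collections import Counter

def consortium_vote(answers, min_agreement=0.5):
    """Return the most common normalized answer, or None when agreement is too low."""
    if not answers:
        raise ValueError("need at least one answer to vote on")
    normalized = [a.strip().lower() for a in answers]
    winner, count = Counter(normalized).most_common(1)[0]
    if count / len(normalized) <= min_agreement:
        return None  # no clear majority: better to abstain than to guess
    return winner

# Hypothetical outputs from three runs of the same prompt.
print(consortium_vote(["Paris", "paris ", "Lyon"]))      # prints "paris"
print(consortium_vote(["Paris", "Lyon", "Marseille"]))   # prints "None"
```

Returning `None` when the runs disagree gives the application a chance to fall back to "I don’t know" instead of passing along a low-confidence answer. Each extra run adds compute, which is where the cost multiplier comes from.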
