Black Seed USA AI Hub - Page 2

LLM Compression vs Model Switching: A Practical Guide for 2026

Learn when to compress large language models versus switching to smaller ones for optimal performance and cost. Discover real-world examples, benchmarks, and expert tips for deploying efficient AI systems in 2026.

Supervised Fine-Tuning for LLMs: A Practical Guide for Practitioners

A practical guide to implementing supervised fine-tuning for large language models, covering data preparation, hyperparameters, common pitfalls, and real-world examples to customize AI models effectively.

Security SLAs for Vibe-Coded Products: Patch Windows and Ownership

Vibe coding speeds up development but introduces severe security risks. Traditional patch windows are obsolete: critical flaws need fixes in hours, not days. Ownership is unclear, and runtime security is now essential. Learn how to build SLAs that actually work.

Contact Center ROI from Generative AI: How Handle Time, CSAT, and First Contact Resolution Drive Real Savings

Generative AI is cutting contact center handle time by 20%, boosting CSAT by 18%, and increasing first contact resolution. Real companies are saving millions. Here’s how.

Benchmarking Compressed LLMs on Real-World Tasks: A Practical Guide

Learn how to properly benchmark compressed LLMs using ACBench, LLMCBench, and GuideLLM to avoid deployment failures. Real-world performance matters more than size or speed.

Latency Management for RAG Pipelines in Production LLM Systems

Learn how to cut RAG pipeline latency from 5 seconds to under 1.5 seconds using Agentic RAG, streaming, connection pooling, and approximate search. Real-world benchmarks, tools, and tradeoffs for production LLM systems.

GDPR and CCPA Compliance in Vibe-Coded Systems: Data Mapping and Consent Flows

GDPR and CCPA require detailed data mapping and transparent consent flows, especially in vibe-coded systems that rely on user behavior. Learn what you must document, how to map data flows, and why automated tools aren't enough without human oversight.

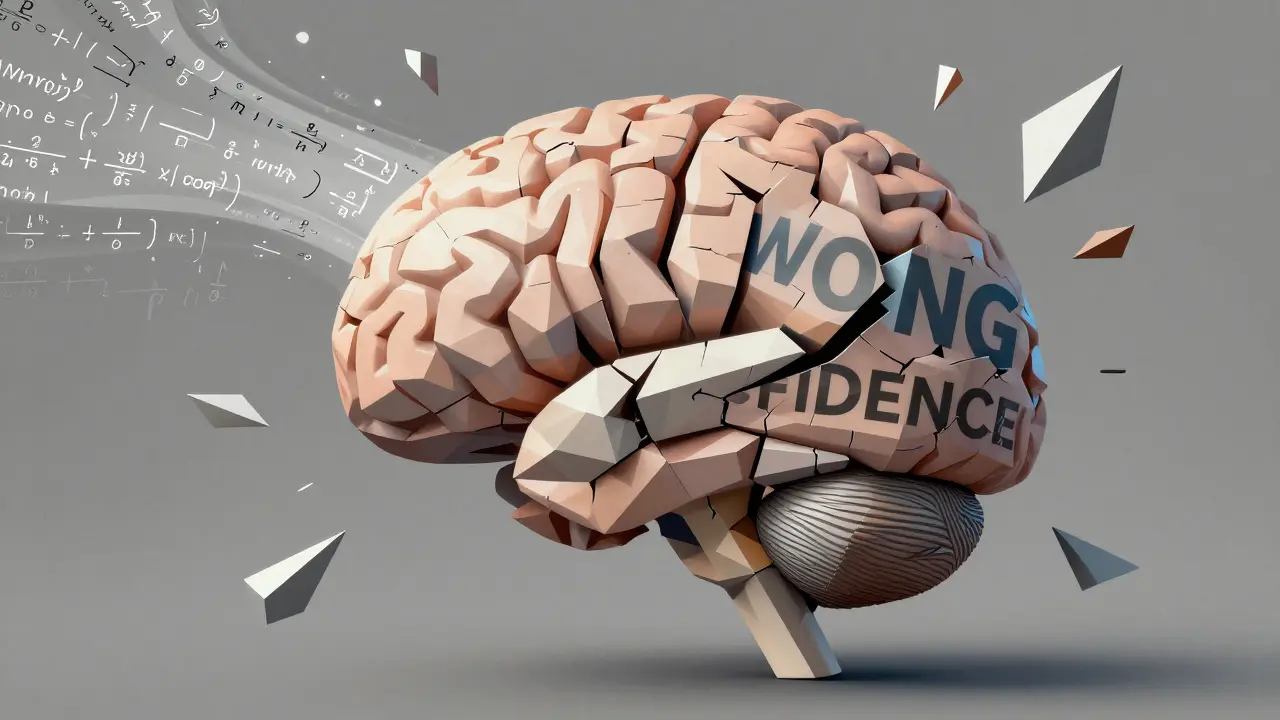

Error Messages and Feedback Prompts That Help LLMs Self-Correct

Learn how to use feedback prompts to help LLMs correct their own errors: when it works, when it fails, and how to implement it without falling into overconfidence traps.

How Generative AI Boosts Revenue Through Cross-Sell, Upsell, and Conversion Lifts

Generative AI is driving measurable revenue growth through smarter cross-sell and upsell strategies, with top companies seeing 18%+ increases in average order value and conversion lifts up to 20%. Learn how it works, and how to make it work for you.

Vision-Language Models That Read Diagrams and Generate Architecture

Vision-language models now read architectural diagrams and generate documentation, code, and design insights. Learn how they work, where they excel, their limitations, and how to use them safely in real software teams.

Bias-Aware Prompt Engineering to Improve Fairness in Large Language Models

Bias-aware prompt engineering helps reduce unfair outputs in large language models by changing how you ask questions, not by retraining the model. Learn proven techniques, real results, and how to start today.

Team Collaboration in Cursor and Replit: Shared Context and Reviews Compared

Cursor and Replit offer very different approaches to team collaboration: Replit excels at real-time, browser-based coding for learning and prototyping, while Cursor delivers deep codebase awareness and secure, Git-first reviews for enterprise teams.