Black Seed USA AI Hub - Page 3

Knowledge Boundaries in Large Language Models: How AI Knows When It Doesn't Know

Large language models often answer confidently even when they're wrong. Learn how AI systems are learning to recognize their own knowledge limits and communicate uncertainty to reduce hallucinations and build trust.

Data Retention Policies for Vibe-Coded SaaS: What to Keep and Purge

Vibe-coded SaaS apps often collect too much user data by default. Learn what to keep, what to purge, and how to build compliance into your AI prompts to avoid fines and earn user trust.

Agentic Systems vs Vibe Coding: How to Pick the Right AI Autonomy for Your Project

Agentic systems automate coding tasks with minimal human input, while vibe coding lets you build fast with conversational AI. Learn which approach fits your project, and how to use both safely in 2026.

Security Code Review for AI Output: Essential Checklists for Verification Engineers

AI-generated code is often functional but insecure. Verification engineers need specialized checklists to catch hidden vulnerabilities like missing input validation, hardcoded secrets, and insecure error handling. Learn the top patterns, tools, and steps to secure AI code today.

Style Transfer Prompts in Generative AI: Control Tone, Voice, and Format Like a Pro

Learn how to use style transfer prompts in generative AI to control tone, voice, and format without losing meaning. Get practical steps, real-world examples, and pro tips for marketing and content teams.

- 10

- Read More

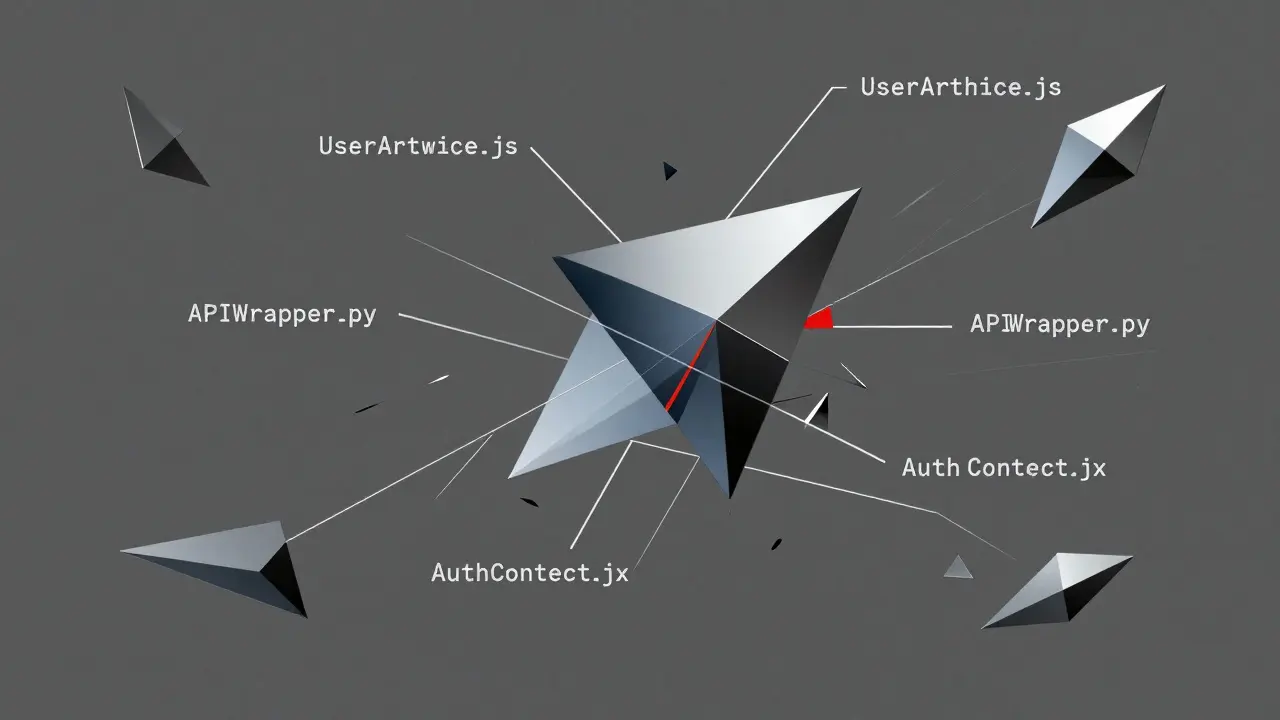

Prompt Chaining for Multi-File Refactors in Version-Controlled Repositories

Prompt chaining lets you safely refactor code across multiple files using AI, reducing errors by 68% compared to single prompts. Learn how to use it with LangChain, AutoGen, and version control.

Guarded Tool Access: How to Sandbox External Actions in LLM Agents for Real-World Security

Sandboxing LLM agents is no longer optional: untrusted tool access can leak data even with perfect prompt filters. Learn how Firecracker, gVisor, Nix, and WASM lock down agents to prevent breaches.

Secure Defaults in Vibe Coding: How CSP, HTTPS, and Security Headers Protect AI-Generated Apps

Secure defaults in vibe coding (CSP, HTTPS, and security headers) are critical to protecting AI-generated apps from attacks. Learn why platforms like Replit lead in security and how to fix common vulnerabilities before they're exploited.

Security Telemetry and Alerting for AI-Generated Applications: What You Need to Know

AI-generated apps behave differently from traditional software. Learn how security telemetry tracks model behavior, detects prompt injections, and reduces false alerts without relying on outdated tools.

Safety in Multimodal Generative AI: How Content Filters Block Harmful Images and Audio

Multimodal AI can generate images and audio from text, but harmful content can still slip through filters. Learn how companies block dangerous outputs, what hidden threats images and audio can carry, and what you need to know before using these systems.

Impact Assessments for Generative AI: DPIAs, AIA Requirements, and Templates

Generative AI requires strict impact assessments under GDPR and the EU AI Act. Learn what DPIAs and FRIAs are, who needs them, how to use templates, and what happens if you skip them.

Agent-Oriented Large Language Models: Planning, Tools, and Autonomy

Agent-oriented large language models go beyond answering questions: they plan, use tools, and act autonomously. Learn how they work, where they're used, and why they're changing AI forever.
